Two-Stage Group Quizzes Part 1: What, How and Why

Posted: March 23, 2011 Filed under: Group Quizzes, Immediate Feedback Assessment Technique 22 Comments

Note: This is the first in a series of posts, and is based on my fleshing out in more detail a poster that I presented at the fantastic FFPERPS conference last week. The basic points of group exam pros and cons, and the related references borrow very heavily from Ref. 5 (thanks Brett!). All quoted feedback is from my students.

Introduction

A two-stage group exam is a form of assessment where students learn as part of the assessment. The idea is that the students write an exam individually, hand in their individual exams, and then re-write the same or a similar exam in groups, where learning, volume and even fun are all had.

Instead of doing this for exams, I used the two-stage format for my weekly 20-30 minute quizzes. These quizzes would take place on the same day that the weekly homework was due (Mastering Physics so they had the instant feedback there as well), with the homework being due the moment class started. I used them for the first time in Winter 2011 in an Introductory Calculus-based E&M course with an enrolment of 37 students.

The format of the weekly quiz (20-30 minutes total) was as follows:

- The students wrote their 3-5 multiple-choice and short-answer question quiz individually and afterward handed these solo quizzes in.

- The group quiz (typical group size = 3) consisted of most or all of the individual questions as multiple-choice questions. Most questions had five choices.

- The marks were weighted 75% for the individual component and 25% for the group component. The group component was not counted if it would drop an individual’s mark.
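
The weighting rule above can be sketched in a few lines of Python. This is only an illustration, not the tool actually used to compute marks in the course; the function name `combined_quiz_mark` and the convention of expressing marks as fractions between 0 and 1 are assumptions made for the example.

```python
def combined_quiz_mark(individual: float, group: float,
                       individual_weight: float = 0.75) -> float:
    """Combine solo and group quiz marks (both given as fractions from 0 to 1).

    Hypothetical helper: 75% individual / 25% group by default, with the
    group component ignored whenever it would lower the student's mark.
    """
    weighted = individual_weight * individual + (1 - individual_weight) * group
    # Dropping the group component is the same as keeping the solo mark,
    # so the final mark is whichever of the two is larger.
    return max(individual, weighted)
```

For example, a student with 60% on the solo quiz whose group scored 100% would end up with roughly 70%, while a student with 80% solo and a 50% group score would simply keep their 80%.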

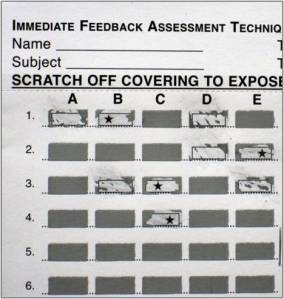

Immediate Feedback Assessment Technique (IF-AT)

The group quizzes were administered using IF-AT [1] cards, with correct answers being indicated by a star when the covering is scratched off. This immediate feedback meant that the students always knew the correct answer by the end of the quiz, which was a time at which they were at their most curious, and thus the correct answer should be most meaningful to them. Typically, students who did not understand why a given answer was correct would call me over and ask for an explanation.

“I feel finding out the answers immediately after the quiz helps clarify where I went wrong instead of letting me think my answer was right over the weekend. It also works the same for right answers.”

My students found it to be a very fair marking system because they received partial marks based on their number of incorrect answers. My system was 2 points if you got it correct on the first try, and that went down by half for each wrong answer. So 1 wrong answer = 1 point credit, 2 wrong answers = 0.5 point credit, and so on.
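
The halving rule can be written as a simple formula: credit = 2 × (1/2)^n, where n is the number of wrong scratches before the star appears. A minimal Python sketch follows. The function name is hypothetical, and whether the halving continues all the way to the last remaining box (rather than dropping to zero) is an assumption, since the description above only says "and so on".

```python
def ifat_question_score(wrong_attempts: int, full_credit: float = 2.0) -> float:
    """Credit for one IF-AT question after a given number of wrong scratches.

    Assumes the credit is halved for every wrong answer with no floor,
    which is one reading of "and so on" in the scheme described above.
    """
    if wrong_attempts < 0:
        raise ValueError("wrong_attempts must be non-negative")
    return full_credit * 0.5 ** wrong_attempts


# Credit for 0 to 4 wrong scratches on a five-choice question.
credits = [ifat_question_score(n) for n in range(5)]
```

Under this reading, a group that needs every box on a five-choice question would still earn 0.125 points for that question.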

The General Benefits of Group Exams

Peer interactions and feedback: Both students benefit when one explains an answer to the other.

“When I think I know what’s going on I can explain it and realize yes, I really do know what I’m talking about…and sometimes vice-versa.”

All students are given a good chance to participate: All students, including the shy or quiet, participate and are given a chance to explain their understanding and reasoning. I gave nine of these two-stage quizzes over the term and don’t remember seeing any students sitting on the sidelines letting the rest of their group figure it out. They were invested because their marks were on the line, and they genuinely (based on feedback) seemed to feel like it was a good opportunity for learning.

Development of collaboration skills [2]: I can’t really comment on this too much. These are not skills in which I was able to notice a difference over the course of the term, but I would certainly believe that many students had some tangible level of development of these types of skills.

Students enjoy them and there is an increase in overall enjoyment of course [2]: The claim about the increase in overall enjoyment from Ref. 2 is not something I can comment on. I changed many things in this course from the first time I taught it, so I couldn’t pinpoint one thing which led to higher student evaluations and a more positive classroom culture (note this was only my second time teaching this course). But I can certainly tell you the feedback I got regarding the group quizzes was overwhelmingly positive.

“I (heart) group quizzes!!! I always learn, and it’s nice to argue out your thinking with the group. It really helps me understand. Plus, scratching the boxes is super fun.”

They promote higher-level thinking [3]: This claim from Ref. 3 is another one I cannot comment on: I did not look for evidence of it, nor did I see any.

Increase in student retention of information (maybe) [4]: This is at the heart of a study which I plan to run this coming year. Ref. 4 saw a slight increase in student retention of information on questions for which students completed the group quizzes. But this study did not control for differences in time-on-task between the students that completed just the individual exams and those that completed individual and group exams. More discussion on my planned study in a future post.

Improved student learning [3,5]: I saw the same sort of evidence for this that is shown in Ref. 5. Groups of students, where none of them had the correct answer for a question on their solo quizzes, were able to get the correct answer on the group quiz nearly half the time. This shift from nobody having the correct answer to the group figuring out the correct answer is a very nice sign of learning.

Some Specific Benefits I Saw in My Implementation

The feedback comes when it is most useful to them: Immediate feedback via IF-AT provides them with the correct answer when they are very receptive, after having spent time on their own and in group discussion pondering the question.

“It’s a good time to discuss and it’s the perfect time to learn, ‘cause right after the quiz, the ideas and thoughts stick to your mind. It’s gonna leave a great impression.”

Very high engagement from all students: I observed my students to be highly engaged with the questions at hand, and they were very excited (high-fives and other celebrations were delightfully common) when they got a challenging question correct.

Reduced student anxiety: due to (a) knowing that they could earn marks even if they were incorrect on the individual portion, and (b) knowing that they would come away from the quiz knowing the correct answers. Point (a) is pure speculation on my part. Point (b) was made by multiple students when I asked them to provide feedback on the group quiz process.

Some Drawbacks to Group Exams

Longer exam/quiz time: This really wasn’t a big deal. It was typically less than an extra 10 minutes and the advantages were far too great to not give up that extra little bit of class time.

Some students feel very anxious about group work and interactions: This never came up in the feedback I received from the students, but I have friends and family who have discussed with me how much they dislike group work. Perhaps this specific implementation might have even been to their liking.

Social loafers and non-contributors earn same marks as the rest of the group: To my mind the potential student frustration from this was greatly moderated by all students writing the solo quizzes, as well as the group portion being worth only 25% of the total quiz mark. And as I mentioned earlier, I do not remember noticing a non-contributor even once over the term.

Dominant group members can lead group astray when incorrect: This is another thing which, to my mind, the IF-AT sheets moderate greatly. Dominant group members can potentially minimize the contributions of other group members, but I do remember an “ease-your-mind” point about dominant-student issues made by Jim Sibley when I first learned of IF-AT. Jim Sibley is at the University of British Columbia and is a proponent of Team-Based Learning. In this learning environment they use the IF-AT cards for reading quizzes at the start of a module. He told us that groups often go to the shy or quiet members as trusted answer sources when dominant group members are repeatedly incorrect.

References

[1] http://www.epsteineducation.com

[2] Stearns, S. (1996). Collaborative Exams as Learning Tools. College Teaching, 44, 111–112.

[3] Yuretich, R., Khan, S. & Leckie, R. (2001). Active-learning methods to improve student performance and scientific interest in a large introductory oceanography course. Journal of Geoscience Education, 49, 111–119.

[4] Cortright, R.N., Collins, H.L., Rodenbaugh, D.W. & DiCarlo, S.T. (2003). Student retention of course content is improved by collaborative-group testing. Advan. Physiol. Edu., 27, 102–108.

[5] Gilley, B. & Harris, S. (2010). Group quizzes as a learning experience in an introductory lab. Poster presented at Geological Society of America 2010 Annual Meeting.

Updates

March 26, 2011 – Added: “These quizzes would take place on the same day that the weekly homework was due (Mastering Physics so they had the instant feedback there as well), with the homework being due the moment class started.”

The Science Learnification Weekly (March 13, 2011)

Posted: March 13, 2011 Filed under: Learnification Weekly, Nature of Science, Oral Assessments, Problem Solving, Standards-Based Grading Leave a comment

This is a collection of things that tickled my science education fancy in the past week or so. They tend to be clumped together in themes because an interesting thing on the internet tends to lead to more interesting things.

Moving beyond plug-n-chug Physics problems

- Dolores Gende talks about representations in Physics and how these can be used to move the student problem-solving process beyond just formula hunting. Translation of representation is a very challenging task for novice Physics students, and a typical end-of-chapter exercise can be made much more challenging by asking them to translate from one representation to another, such as asking them to extract “known quantities” from a graph instead of giving those quantities explicitly in the word problem. I must say that I prefer Knight over other intro texts as a source of homework and quiz problems because he has a lot of these physics exercise + translation of representation questions. Gende links to the Rutgers University PAER (Physics and Astronomy Education Research) multiple representation resources, but there are a ton of other excellent resources throughout the PAER pages.

Scientific thinking and the nature of science

- Early this past week, Chad Orzel from the Uncertain Principles blog published three posts related to scientific thinking and the general population: Everybody Thinks Scientifically; Teaching Ambiguity and the Scientific Method; and Scientific Thinking, Stereotypes, and Attitudes. I won’t even try to summarize the posts here, but one of the main messages is that letting the average person believe that science is too difficult for them is not a great idea.

- On Thursday I wrote a post which featured a couple of activities that can help teach about the nature of science. In the comments, Andy Rundquist brought up the mystery tube activity, which was also discussed in a recent Scientific American post arguing that schools should teach more about how science is done.

- Habits of scientific thinking is a post from John Burk of the Quantum Progress blog. A nice discussion follows in the comments. His example habit is…

“Estimate: use basic numeric sense, unit analysis, explicit assumptions and mathematical reasoning to develop an reasonable estimate to a particular question, and then be able to examine the plausibility of that estimate based its inputs.”

- Chains of Reasoning is a post from the Newton’s Minions blog. He is trying to work on getting his physics students from information to conclusion through logical (inference) steps. In his words: “I’m trying to directly, explicitly work on students in physics reasoning well.” His main message for his students is one that sums up well the disconnect between the common perception of science and the true nature of science:

“Science isn’t about ‘knowing;’ it’s about being able to figure out something that you don’t know! If you can’t reason, then you’re not doing science.”

What Salman Khan might be getting right

- Mark Hammond’s first post on his Physics & Parsimony blog talks about some of the positive things that we can take away from Khan’s recent TED talk, which has been a hotly discussed topic on the old internet. I had been paying some attention to the discussion, but didn’t actually watch the talk until after reading Hammond’s post. It is much easier to tear something apart than to do as Mark did and pull out some important lessons. Mark’s two things that Khan is getting right are related to flipped classrooms and mastery learning, and it is important to remember that most of the audience being reached by this talk have never heard of these education paradigms, which are generally supported by the greater education reform community (myself included). I commented on Mark’s blog:

“In terms of public service, I feel that he could have sold the idea of the flipped classroom as something that every teacher can do, even without his videos, but that his academy makes it even easier for teachers to implement. I’m sure this is the first time that many people have heard of a flipped classroom, and it would be nice if people understand that this is a general teaching strategy and not something brand-new that you can all of a sudden do thanks to Khan.”

Collaborative scoring on oral assessments

- Andy Rundquist posted a couple of videos showing him collaborate with students in scoring oral assessments for his upper-division Mathematical Physics course, which also happens to be his first Standards-Based Grading implementation.

Activities to Teach the Nature of Science

Posted: March 10, 2011 Filed under: Dissemination Summary, Nature of Science 7 Comments

Chad Orzel from the Uncertain Principles blog recently posted a trifecta of posts discussing scientific thinking. Among other things these posts reminded me of the persistent misconception (in the media, the population at large and even among undergraduate science majors) that scientific hypotheses and theories can be proven, and of the confusion between the common usage of the word theory (“it’s just a theory”) and the scientific use of the word. Here are a couple of activities that can be used (from higher K-12 grades straight through to undergraduates) to help your class learn about the nature of science by modeling scientific inquiry at a very easy to understand level. A conversation on twitter (with @polarisdotca) reminded me that I had intended to write a quick post on these activities since I seem to talk about them fairly often.

The Game of Science

I first encountered this at a Summer AAPT (American Association of Physics Teachers) workshop run by David Maloney and Mark Masters, the authors of the Physics Teacher article “Learning the Game of Formulating and Testing Hypotheses and Theories” (The Physics Teacher, Jan. 2010, Vol. 48, Issue 1, p. 22). You give each group in your class a “list of the moves made by two novice, but reasonably intelligent players” from when they played an abstract strategy boardgame (think games like checkers or go but way simpler in this case). The group plays out the moves of the two novice players and tries to deduce the rules of the game. The students are able to generate hypotheses (propose rules) which can be disproven by data (moves which break the rules). Further lists of moves can be given to test the students’ theories (the sets of rules which have survived the hypothesis testing). The links between what they are doing and hypothesis testing and theories are discussed explicitly. This activity also leads to discussions of whether it is possible to prove a hypothesis or theory and how a theory, once accepted by the classroom, is quite robust. If a future list of moves for a subsequent game ended up showing that one of the small rules was wrong, it wouldn’t mean that the entire set of rules was incorrect, but instead would just mean that the set of deduced rules (the theory) would need to be slightly revised. You are also able to discuss ideas like scientific consensus, with all the groups in the room agreeing on the deduced rules, and confidence in theories which withstand many tests (sets of moves lists).

It is worth noting that I am an extra big sucker for the Game of Science because I am an avid boardgamer.

A preprint of the paper and sample materials for the Game of Science are available here.

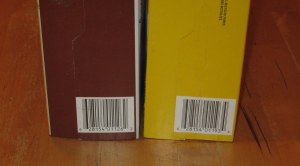

Learning About Science from Cereal Boxes

The paper “Learning About Science and Spectra from Cereal Boxes” (The Physics Teacher, Oct. 2009, Vol. 47, Issue 7, p. 450), whose authors include Bob Beichner of SCALE-UP fame, describes an activity that is very much in the same spirit as the Game of Science. They provided students with the barcodes (with UPC) for four boxes of cereal. The students then developed some hypotheses based on the UPC codes that they had. Due to the specific codes that they were supplied, they were able to hypothesize that the first set of 5 numbers in the UPC represented the manufacturer. They also hypothesized that all the UPC codes started with a 0, but were able to later disprove this hypothesis when they discovered that their textbook had a UPC code which started with a 9, prompting them to revise their hypothesis to “UPC codes for food start with a 0.” This activity leads to the same types of discussions surrounding the process of scientific inquiry and the development of scientific knowledge that are highlighted in the above discussion of the Game of Science.

They also did further activities with matching the barcodes to the UPC codes. In the post-activity discussion several groups called the UPC/barcode the product’s thumbprint and the instructors drew a parallel to spectra being unique identifiers for elements: “a way to recognize each using nothing but a set of lines in specific patterns.” Although this activity can be used to teach about the nature of science, in the authors’ implementation it also served to set up a unit on spectra.

The Physics Problem and Standards-Based Grading

Posted: March 9, 2011 Filed under: Standards-Based Grading 25 Comments

Inspired by the Standards-Based Grading Borg, I am slowly putting together the picture of my own potential SBG implementation. One thing which I have yet to sort out is how the typical physics problem fits into a Standards-Based Grading implementation. Or to ask a slightly different question...

How does one assess a typical physics problem in a Standards-Based Grading implementation?

Note: I have not read any of the SBG literature and my exposure to SBG comes entirely from the SBG blog Borg so my questions arise from the implementations with which I am familiar.

Second note: This post is meant to start a conversation that will hopefully help me sort out how I can make Physics problems and SBG ideals happily co-exist in a course. I am not tied to the exams or any other part of my colleagues’ sections of the same intro courses that I teach, but in the end I have to show that my students are able to solve the same kind of problems that show up on my colleagues’ exams.

The Physics problem

I would argue that, in the typical university physics course, the physics problem is the most commonly used assessment item. Problems are assigned for homework, they show up on exams, students do tons of end-of-the-chapter problems to study for exams, and most college instructors use them as the primary method to teach new concepts. My intro-level courses are far from traditional from the point of view of how class time is spent (pre-lecture reading assignments, clicker questions using peer instruction, strong conceptual emphasis, lots of group work), but I still use a traditional assessment system. My students work problems on their homework assignments, in their quizzes and on their exams. Approximately 1/3 of the marks on my exams come from problems.

I am being overly generous and calling what is usually just an exercise a problem. A “physics problem” is something which requires actual problem solving on the part of the person attempting it and not just some algorithmic pattern matching based on previously seen or completed exercises. But let’s not get hung up on this distinction. Let’s just say that a physics problem is something which requires some or all of the following skills:

- Identifying relevant physics concepts and correctly applying those concepts to the situation discussed in the problem statement;

- Building a model;

- Keeping track of units;

- Procedural/mathematical execution;

- Respecting significant figure rules;

- Making and justifying estimates;

- Checking the reasonableness of answers;

- Translation of representation (for example between graphical, words, formulas, motion diagrams and/or pictorial);

- Writing up clear, coherent and systematic solutions.

I’m sure folks could come up with many others, but those are the skills that my brainstorm yielded.

Assessing a Physics problem in SBG

Let’s say that, for various reasons (administration, the rest of my department, transfer status of the course to larger universities, etc.), I must have my students tackle numerous Physics problems during a course and these are problems which consistently require as many of the above-listed skills as possible. How do I assess these problems in SBG?

My colleagues would argue that most of the above-mentioned skills used when solving a problem are very important skills for a person continuing on in physics and that we should “teach those skills” to our students. I could write up the above-mentioned skills as standards. I could then follow one of two primary strategies: assess most of these standards individually or assess multiple standards on each problem.

The problem with the individually assessed standards is that they are all part of the problem-solving package and assessing each of them individually doesn’t assess the coherent whole of problem solving.

With assessing multiple standards on each problem, not every standard can be present at the same level. And for some of these standards it seems as if trying to assess them from a tiny piece of an individual problem would be the same as assessing how accurate a basketball shot somebody has based on seeing them shoot only a single free-throw.

Incorporating Physics problems into an SBG-assessed course

Now let’s say that the above is still true: I must have my students tackle Physics problems. But, for whatever reason, I can’t/don’t/won’t come up with a good way to assess them. But I want to be true to SBG and not have to just tack on 10% for homework problems or just give them an exam at the end that has problems and is worth a certain fraction of their mark while all their SBG-assessed standards are worth the remaining fraction of their marks. I just want their grade to be some sensible reflection of their scores from the assessed standards.

How do I incorporate Physics problems into my course in this case?

A couple more quick questions

- If I am using problems to assess multiple problem-solving standards at a time, how do they earn the “exceeds expectations” levels (4/4 or 10/10) on their standards?

- The common practice in SBG seems to be to make the standard being tested nice and explicit. But having a standard like “identify relevant physics concepts” means that you have to avoid making the conceptual standards explicit with a problem. Is that good, bad, or does it matter?

The Science Learnification Weekly (March 6, 2011)

Posted: March 6, 2011 Filed under: Arduino, Flipped Classroom, Learnification Weekly, Screencast, Standards-Based Grading 2 Comments

This is a collection of things that tickled my science education fancy in the past week or so. Some of these things may turn out to be seeds for future posts.

Screencasting in education

Last week I posted links to a couple of posts on screencasting as part of a collection of posts on flipped/inverted classrooms in higher education. Well this week I’m going to post some more on just screencasting.

- I mentioned this last week, but Robert Talbert has started a series of posts on how he makes screencasts.

- In the first post, he is kind enough to spell out exactly what a screencast is among other things.

- In the second post, he talks about the planning phase of preparing a screencast.

- Roger Freedman goes all meta on us and posts a screencast about using screencasts (well, it’s actually about his flipped a.k.a. inverted classroom and how he uses screencasts as part of that).

- Andy Rundquist talks about using screencasting to grade and provide feedback. He also gets his students to submit homework problems or SBG assessments via screencast. He has a ton of other posts on how he uses screencasting in the classroom.

- It’s unofficially official that #scast is the hashtag of choice for talking about screencasting on twitter.

- Added March 10: Mylene from the Shifting Phases blog talks about some of the nuts and bolts of preparing her screencasts including pointing out how the improved lesson planning helps her remember to discuss all the important little points.

I taught a 3rd-year quantum mechanics course last year and encouraged the students, using a very small bonus marks bribe, to read the text before coming to class. I think that due to the dense nature of the material, their preparation time would be much more productive and enjoyable if I created screencasts for the basic concepts and then had a chance to work on derivations, examples and synthesis in class. With the reading assignments they were forced to try to deal with basic concepts, synthesis, derivations and examples on their own which was asking quite a lot for their first contact with all those new ideas. I’m pretty interested to try out screencasting and

Arduino

I have been scheming for a while to bring the Arduino microcontroller board (a.k.a. very small open-source computer) into my electronics courses starting with a 2nd year lab. Arduino is a favorite of home hobbyists and the greater make community.

- Phil Wagner from the Broken Airplane blog gets in even earlier than I am planning to and talks about teaching “Modern Electronics” to high school students with Arduino. He even has some tutorials if you want to try the fun out for yourself.

Standards-based grading in higher education

Standards-based grading (SBG) is a pretty hot topic in the blogosphere (SBG gala #5) and on twitter (#sbar). There’s a nice short overview of standards-based grading at the chalk|dust blog.

I am very fond of the idea of basing a student’s grade on what they can do by the end of the course instead of penalizing them for what they can’t do along the way when they are still in the process of learning. I also love the huge potential to side-step test anxiety and cramming.

Folks using this grading scheme/philosophy (a.k.a. the SBG borg) are mostly found at the high-school level, but there are some folks in higher ed implementing it as well. I am strongly considering trying out SBG in one of my future upper-division courses, such as Quantum Mechanics, but there are some implementation issues that I want to resolve before I completely sell myself on trying it out. I am in the middle of writing a post about these issues and look forward to discussing them with those that are interested.

Special thanks go to Jason Buell from the Always Formative Blog for bringing most of these higher ed SBG folks to my attention. He has a great bunch of posts on SBG implementation that fork out from this main page.

SBG implementations in higher ed:

- Andy Rundquist is using collaborative oral assessments as part of his SBG implementation. This is the only higher ed Physics implementation that I have encountered so far and I have been chatting Andy up a ton about what he is up to in his first implementation.

- Adam Glesser from the GL(s,R) blog has tons of SBG posts. He is in his first year of a full SBG implementation in his Calculus courses. He gets bonus points for being a boardgame geek and playing Zelda with his 4-year-old son.

- Sue VanHattum talks about wading into the water as she slowly moves into SBG implementations by way of a mastery learning implementation. Search her blog for other SBG posts.

- Bret Benesh comes up with a new grading system for his math courses with help from the SBG borg.

- Added March 10: Mylene teaches technical school electronics courses and replaces the achievement levels for each standard with a system where the standards build on each other and are assessed using the binary yup or nope system.

That’s it for this week. Enjoy the interwebs everybody.

Open thread: What is the impact of getting students to make “real-world connections”?

Posted: March 4, 2011 Filed under: Open Thread, Real-world Connections Leave a comment

This discussion point comes from a friend of mine, Sandy Martinuk, who is working on his PhD in Physics Education Research at UBC. One of the questions that he is struggling with in his research has to do with the idea of trying to get students to make “real-world connections” in their introductory Physics courses.

So here is the discussion point:

Instructors often use examples or stories designed to help their students see connections between physics and the real world, but rarely think about exactly what they hope to achieve. What does it mean for students to make a “real-world connection”? Do these have any lasting affective or cognitive impact?

Let’s hear your thoughts, mighty blogosphere, and feel free to substitute your science of choice for Physics if that is where your expertise lies.
