Student feedback on having weekly practice quizzes (instead of homework)

Posted: February 14, 2013 Filed under: Group Quizzes, Homework, Introductory Electricity and Magnetism, Practice Quizzes, Weekly Quizzes | Tags: intro physics, practice quiz questions

This term I eliminated the weekly homework assignment from my calc-based intro physics course and replaced it with a weekly practice quiz (not for marks in any way), meant to help students prepare for their weekly quiz. There’s a post coming that discusses why I have done this and how it has worked, but, à la Brian or Mylene, I think it can be valuable to post this student feedback.

I asked a couple of clicker questions related to how they use the practice quizzes and how relevant they find the practice quiz questions in preparing them for the real quizzes. I also handed out index cards and asked for extra comments.

Aside from changing from homework assignments to practice quizzes, the structure of my intro course remains largely the same. I get them to do pre-class assignments, we spend most of our class time doing clicker questions and whiteboard activities, and there is a weekly two-stage quiz (individual then group). I have also added a single problem (well, closer to an exercise) to each weekly quiz, whereas in the past I only occasionally asked them to work a problem on a quiz.

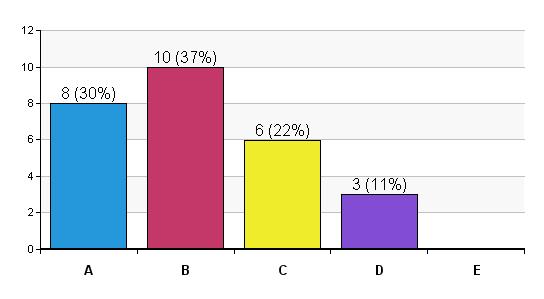

Clicker Question 1

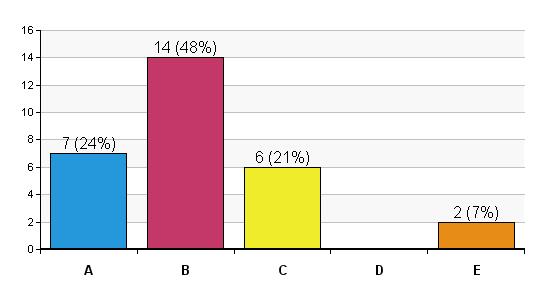

Clicker Question 2

Just from a quick scan of the individual student responses to this one, I saw that the students with the highest quiz averages (so far) tended to answer A or B, whereas the students with the lower quiz averages tended to answer B or C. I will look at the correlations more closely at a later date, but I find this a really interesting insight.
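One way to make that quick scan a little more systematic, sketched here in Python purely as an illustration, is to group students by their clicker choice and compare the mean quiz average within each group. The function name and the input format (one `(answer, quiz_average)` pair per student, e.g. exported by hand from the clicker software) are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def quiz_average_by_answer(responses):
    """Return {clicker_answer: mean quiz average} for a list of pairs.

    `responses` is a hypothetical export with one (clicker_choice, quiz_average)
    tuple per student, e.g. ("A", 82.5).
    """
    groups = defaultdict(list)
    for answer, quiz_avg in responses:
        groups[answer].append(quiz_avg)
    # Sort by answer letter so the summary reads A, B, C, ...
    return {answer: mean(scores) for answer, scores in sorted(groups.items())}
```

A per-option mean is only a first look; with a class-sized sample and uneven group sizes, differences between options are suggestive rather than conclusive.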

Additional Written Feedback

Most of the time I ask the students for some feedback after the first month and then continue to ask them about various aspects of the course every couple of weeks, although in some courses I am not so good about keeping up that frequency.

Usually, for this first round of feedback, the additional comments are dominated by frustration with the online homework system (I have used Mastering Physics and smartPhysics), requests or demands for me to do more examples in class, and some comments about a disconnect between the weekly homework and the weekly quiz. As you can see below, there is none of that this time. The practice quizzes, the inclusion of a problem on each weekly quiz, and perhaps the provided learning goals seem to do a pretty good job of communicating my expectations to them (and thus minimizing their frustration).

Student comments (that were somewhat on topic)

- I feel like the practice quizzes would be more helpful if I did them more often. I forget that they have been posted so maybe an extra reminder as class ends would help.

- The wording is kind of confusing then I over think things. I think it’s just me though. Defining the terms and the equations that go with each question help but the quizzes are still really confusing…

- Curveball questions are important. Memorize concepts not questions. Changes how students approach studying.

- The group quizzes are awesome for verbalizing processes to others. I like having the opportunity to have “friendly arguments” about question we disagree on

- I love the way you teach your class Joss! The preclass assignments are sometimes annoying, but they do motivate me to come to class prepared

- I enjoy this teaching style. I feel like I am actually learning physics, as opposed to just memorizing how to answer a question (which has been the case in the past).

- I really enjoy the group quiz section. It gets a debate going and makes us really think about the concepts. Therefore making the material stick a lot better.

Last thought: with this kind of student feedback, I like to pick out a couple of things that I can improve or change and bring them back to the class as things I will work on. It looks like I will need to add a weekly feedback question that asks them specifically about areas where the course could be improved.

Student opinions on what contributes to their learning in my intro E&M course

Posted: March 23, 2012 Filed under: Clickers, Flipped Classroom, Group Quizzes, Homework, Immediate Feedback Assessment Technique, Inverted Classroom, Quiz Correction Assignments, Whiteboards

We are a couple of weeks away from our one and only term test in my intro calc-based electricity and magnetism course. This test comes in the second-to-last week of the course, and I pitch it to them as practice for the final. The term test is worth 10-20% of their final grade and the final exam 30-40%, with the weights within those ranges chosen to maximize each individual student’s grade.
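The post does not spell out how the two ranges pair up, so the Python sketch below assumes (an assumption, not something stated above) that the term-test and final-exam weights always sum to 50% of the course grade, with the term test anywhere from 10% to 20%. Under that assumption the exam contribution is 0.5 × final + w × (test − final), which is linear in the term-test weight w, so the best choice for a student always sits at one end of the range: 20% if they did better on the term test, 10% if they did better on the final. The function and parameter names are hypothetical.

```python
def best_exam_contribution(test_pct, final_pct, combined_weight=0.50,
                           test_weight_range=(0.10, 0.20)):
    """Return (contribution to course grade, term-test weight used).

    ASSUMPTION: term-test and final-exam weights sum to `combined_weight`,
    with the term-test weight anywhere in `test_weight_range`.
    Scores are percentages (0-100).
    """
    lo, hi = test_weight_range
    best = None
    # The contribution is linear in the test weight, so checking the two
    # endpoints of the allowed range is enough to find the maximum.
    for w_test in (lo, hi):
        w_final = combined_weight - w_test
        contribution = w_test * test_pct + w_final * final_pct
        if best is None or contribution > best[0]:
            best = (contribution, w_test)
    return best
```

For example, a student with 80% on the term test and 60% on the final would get the 20%/30% split under these assumptions.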

Today I asked them how they feel the major course components are contributing to their learning:

How much do you feel that the following course component has contributed to your learning so far in this course?

This is a bit vague, but I told them to vote according to what contributes to their understanding of the physics in this course. It doesn’t necessarily mean what makes them feel the most prepared for the term test, but if that is how they wanted to interpret it, that would be just fine.

For each component below, I briefly describe how it fits into the overall course, so by the end you should have a sense of how the whole course works.

The smartphysics pre-class assignments

The pre-class assignments are the engine that allows my course structure to work the way I want it to, and I have been writing about them a lot lately (see my most recent post in a longer series). My specific implementation is detailed under ‘Reading assignments and other “learning before class” assignments’ in this post. The quick and dirty explanation is that, before coming to class, my students watch multimedia prelectures that have embedded conceptual multiple-choice questions. Afterward they answer 2-4 additional conceptual multiple-choice questions where they are asked to explain the reasoning behind each of their choices. They earn marks for putting in an honest effort to explain their reasoning, as opposed to for choosing the correct answer. Then they show up to class ready to build on what they learned in the pre-class assignment.

Smartphysics pre-class assignments

A) A large contribution to my learning.

B) A small contribution to my learning, so I rarely complete them.

C) A small contribution to my learning, but they are worth marks so I complete them.

D) No contribution to my learning, so I rarely complete them.

E) No contribution to my learning, but they are worth marks so I complete them.

The smartphysics online homework

The homework assignments are a combination of “Interactive Examples” and multi-part end-of-chapter-style problems.

The Interactive Examples tend to be fairly long and challenging problems where the online homework system takes the student through multiple steps of qualitative and quantitative analysis to arrive at the final answer. Some students seem to like these questions, while others find them frustrating because they have figured out 90% of the problem on their own but are forced to step through all the intermediate guiding questions to get to the bit that is giving them trouble.

The multi-part end-of-chapter-style problems, in theory, require conceptual understanding to solve. In practice, I find that a lot of the students simply number mash (combine the given numbers and equations without understanding) until the correct answer comes out the other end, and then don’t bother to step back and make sure they understand why that particular combination gave them the correct answer. The system default (which I have left in place) is that they can have as many tries as they like on each question and are never penalized as long as they eventually find the correct answer. This seems to have really encouraged mindless number mashing.

This is why their responses regarding the learning value of the homework really surprised me. Enough of them have admitted that they usually number mash that I would have expected them to place much less learning value on the homework.

The online smartphysics homework

A) A large contribution to my learning.

B) A small contribution to my learning, so I rarely complete them.

C) A small contribution to my learning, but it is worth marks so I complete it.

D) No contribution to my learning, so I rarely complete it.

E) No contribution to my learning, but it is worth marks so I complete it.

Studying for quizzes and other review outside of class time

Studying for quizzes and other review outside of class time

A) A large contribution to my learning.

B) A small contribution to my learning, but I do it anyway.

C) A small contribution to my learning so I don’t bother.

D) No contribution to my learning so I don’t bother.

Group quizzes

I have an older post that discusses these in detail, but I will summarize here. Every Friday we have a quiz. They write the quiz individually, hand it in, and then rewrite the same quiz in groups. They receive instant feedback on their group quiz answers thanks to IF-AT (Immediate Feedback Assessment Technique) multiple-choice scratch-and-win sheets, and receive partial marks based on how many tries it took them to find the correct answer. Marks are awarded 75% for the individual portion and 25% for the group portion, or 100% for the individual portion if that would give them the better mark.
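The marking rule above amounts to taking the better of two weightings. A minimal Python sketch, assuming both scores are already percentages; the post does not give the IF-AT partial-credit schedule, so the group score is simply passed in rather than computed from the number of tries. The function name is hypothetical.

```python
def quiz_mark(individual_pct, group_pct):
    """Weekly two-stage quiz mark (percentages, 0-100).

    75% individual + 25% group, or 100% individual if that is higher.
    `group_pct` is taken as already computed from the IF-AT tries; the
    per-try partial-credit schedule is not specified in the post.
    """
    blended = 0.75 * individual_pct + 0.25 * group_pct
    return max(blended, individual_pct)
```

Because of the `max`, the group portion can raise a student’s quiz mark but never lower it: `quiz_mark(60, 100)` gives 70, while `quiz_mark(90, 50)` stays at 90.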

The questions are usually conceptual and often test the exact same conceptual step needed for them to get a correct answer on one of the homework questions (but not always with the same cover story). There are usually a lot of ranking tasks, which the students do not seem to like, but I do.

Group Quizzes

A) A large contribution to my learning.

B) A small contribution to my learning.

C) They don’t contribute to my learning.

Quiz Corrections

I have an older post that discusses these in detail, but I will again summarize here. For the quiz correction assignments they are asked, for each question, to diagnose what went wrong and then to generalize their new understanding of the physics involved. If they complete these assignments in the way I have asked, they earn back half of the marks they lost (e.g. a 60% quiz grade becomes 80%).
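The earn-back rule is simple arithmetic: the student recovers a fixed fraction of the marks they lost. The Python sketch below (function name hypothetical, scores as percentages) reproduces the 60% → 80% example; the `fraction_recovered` parameter is included because the quiz feedback handout reproduced later on this page uses one quarter instead of one half for late submissions.

```python
def corrected_quiz_pct(original_pct, fraction_recovered=0.5):
    """Quiz score after an accepted quiz correction assignment.

    The student earns back `fraction_recovered` of the marks they lost:
    one half for on-time work, one quarter for late work per the handout.
    Scores are percentages (0-100).
    """
    lost = 100 - original_pct
    return original_pct + fraction_recovered * lost
```

The assignment is all-or-nothing, so an incomplete or poorly done submission simply leaves the original score unchanged rather than going through this calculation.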

I am delighted to see that 42% of them find that these make a large contribution to their learning. The quizzes are worth 20% of their final grade, so I would have guessed that the perceived learning value of the corrections would get lost in the quest for points.

Quiz Corrections

A) A large contribution to my learning.

B) A small contribution to my learning, so I rarely complete them.

C) A small contribution to my learning, but they are worth marks so I complete them.

D) No contribution to my learning, so I rarely complete them.

E) No contribution to my learning, but they are worth marks so I complete them.

In-class stuff

I am a full-on interactive engagement guy. I use clickers, in the question-driven instruction paradigm, as the driving force behind what happens during class time. Instead of working examples at the board, I either (A) use clicker questions to step the students through the example so that they consider each of the important steps for themselves instead of me just showing them, or (B) get them to work through examples in groups on whiteboards. Although I aspire to have the students report out their solutions in a future version of the course (“board meeting”), what I usually do when they work through an example on their whiteboards is wait until the majority of the groups are mostly done and then work through the example at the board with lots of their input, often generating clicker questions as we go.

The stuff we do in class

A) A large contribution to my learning.

B) A small contribution to my learning.

C) It doesn't contribute to my learning.

The take home messages

Group quizzes rule! The students like them. I like them. The research tells us they are effective. Everybody wins. And they only take up approximately 10 minutes each week.

I need to step it up in terms of the perceived learning value of what we do in class. That two-thirds figure is somewhere between an accurate estimate and a slight overestimate of the fraction of students in class who, at any moment, are actively engaged with the task at hand. This class is 50% larger than my usual intro courses (54 students in this case), and I have been doing a much poorer job than usual of circulating and engaging individual students or groups during clicker questions and whiteboarding sessions. The other third of the students are a mix of students surfing or working on stuff for other classes (which I decided was not something I was going to fight in a course this size) and students who have adopted the “wait for him to tell us the answer” mentality. Peter Newbury talked about these students in a recent post. I have lots of ideas for improving both their perception and the actual learning value of what happens in class, and I will sit down and create a coherent plan of attack for the next round of courses.

I’m sure there are lots of other take-home messages that I can pluck out of these data, but I will take my victory (group quizzes) and my needs-improvement (the in-class stuff) and look forward to continuing to work on improving the course.

My revised quiz reflection assignment and the new homework reflection assignment

Posted: September 1, 2011 Filed under: Homework, Quiz Correction Assignments

One of my very first posts on this blog discussed the quiz correction assignments that I use in my intro calc-based physics courses. I have since renamed them quiz feedback assignments to better represent what I want the students to get out of them. I would actually prefer to call them reflection assignments, but I suspect too many first-year science majors would frown on a word as touchy-feely as “reflection”, so I settled on the likely more palatable “feedback”.

Today Kelly O’Shea posted about her experience with test corrections before and after implementing standards-based grading (SBG) in her classroom. I’m still a year away from my own SBG implementation, but her post got me thinking about an overdue change to my quiz feedback assignments and about introducing this type of assignment for homework as well.

Not all quiz mistakes lead to productive reflections

My quizzes are dominated by clicker-like conceptual multiple-choice questions and by short-answer questions, which typically require translating between representations or are analogous to an important step in a longer problem. The issue with the short-answer questions is that I see a decent number of clerical errors (missing units, silly arithmetic errors, etc.) for which completing the full process I ask for in the quiz reflection assignments gains the student no further physics understanding. So I added a clerical error category to the assignment, which asks them to correct their clerical error and show how doing so leads to the correct answer, instead of doing the full-blown diagnosis and generalization that I ask for with conceptual errors. The handout that I provide for the students is included in the post if you want more details.

Reflection assignments for homework

For my third-year quantum mechanics course (the first four chapters of Griffiths), I am going to offer the reflection assignments to my students for their homework as well as their quizzes. My plan is to mark each part of each homework question according to the following simple scheme:

- Correct (full marks) – The solution and/or requested explanations are complete and correct. You will not be penalized for one or two small mechanical errors such as dropping a negative sign or a factor of 2π.

- Complete (half marks) – The solution and/or requested explanations are complete, but not entirely correct.

- Incomplete (no marks) – The solution and/or requested explanations are not complete. Examples include a correct solution without the requested explanation, partially completed solutions, and solutions which jump over important steps.
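The three-level scheme maps naturally onto a fraction of the available marks for each part. A small Python sketch follows; the enum values come from the scheme above (full, half, none), but the per-part mark values and all names are hypothetical, since the post does not give them.

```python
from enum import Enum


class HomeworkGrade(Enum):
    """Three-level scheme; each value is the fraction of the part's marks earned."""
    CORRECT = 1.0     # complete and correct (one or two small slips allowed)
    COMPLETE = 0.5    # complete, but not entirely correct
    INCOMPLETE = 0.0  # missing explanation, partial, or skipped steps


def homework_score(part_grades, marks_per_part):
    """Total marks for one question, given one HomeworkGrade per part.

    `marks_per_part` is a hypothetical list of what each part is worth.
    """
    if len(part_grades) != len(marks_per_part):
        raise ValueError("need exactly one grade per part")
    return sum(grade.value * marks for grade, marks in zip(part_grades, marks_per_part))
```

For instance, a question with parts worth 4, 4, and 2 marks graded Correct, Complete, and Incomplete would earn 4 + 2 + 0 = 6 marks.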

Quiz Feedback Assignment Handout

Quiz Feedback Assignment (Version 2)

Last updated Sept 1, 2011 (Joss Ives)

Our quizzes are designed to be both a learning experience and an assessment of your current level of understanding of the material. For both these reasons, I offer you the opportunity to learn even more and to improve your quiz score by carefully reflecting on your performance to learn from it. Completing this assignment appropriately within two days of the quiz being returned will allow you to increase your quiz score by half of the points that you missed. This is an all-or-nothing assignment. It is intended only for those students who are interested in making a serious effort to improve their understanding. If it is incomplete or not done well, you will not receive any additional points. Late quiz feedback assignments can be submitted any time up until the date of the final exam, but will only earn back one quarter (instead of one half) of the points that you missed.

Please make sure to attach your quiz paper so I know what you are talking about. You can write or type your quiz corrections, but please put them on a separate sheet from the original quiz.

Types of Errors

For the purpose of this assignment I have divided the common types of errors into conceptual errors and clerical errors. These require slightly different correction processes, which are explained below. If your error seems to lie in some grey area between conceptual and clerical error, treat it as a conceptual error.

Conceptual Errors

These errors represent mistakes in your thinking, mistakes in setting up the problem, mistakes in translation of representation or incomplete understanding of concepts. Translation of representation is when you need to take information from one representation (such as word descriptions, graphs, motion diagrams, symbolic equations) and translate this information to another one of these representations. Incorrectly labeling a negative value as positive, grossly misreading a value off a graph or accidentally swapping your initial and final conditions are all considered conceptual errors in the translation of representation category.

To receive credit for your conceptual error feedback, you need to address the following two phases for each question or problem for which you did not receive full credit. See detailed description of each below:

1) Diagnosis Phase (DP) – Identify what went wrong.

In this phase you need to correctly identify your errors, and diagnose the nature of your difficulties as they relate to specific physics principles or concepts, a problem solving procedure, or beliefs about the nature of science and learning science.

Please note that an incorrect diagnosis or merely descriptive work (such as simply noting the places where you made mistakes) is unacceptable. You need to analyze your thinking behind your mistakes, and explain the nature of these difficulties. Hence, in this phase you need to identify why you answered the way you did, where your understanding might have been weak, what you found difficult, what knowledge or skills you were missing that prevented you from correctly completing the solution, etc.

Poor Diagnosis – No description of thinking behind difficulty

- “I was confused.”

- “I thought it would be 5 N.”

- “I picked the wrong equation.”

- “I didn’t remember to use F=ma.”

Good Diagnosis – Focuses on reasons for actions

- “I thought that the larger velocity would mean the larger force.”

- “I knew it was angular momentum, but I didn’t apply it correctly – I neglected the angular momentum of the ball about the pivot point of the rod.”

2) Generalization Phase (GP) – Learn from your mistakes by generalizing beyond the specific problem.

In this phase you need to identify what deeper physics understanding you have gained from your diagnosis. By carefully thinking about the particular aspects that were problematic to you in approaching the question/problem, and correlating them with the correct solution, you should develop a better understanding of the basic physics principles. In your writing you should identify this new understanding and describe how it will prevent you from having similar problems in the future. Please note that merely stating the correct solution, whether by copying or paraphrasing another student’s solution, is unacceptable. You are expected to generalize beyond the specific problem to discuss the general principles of physics.

In your writing you are very welcome to identify not only your understanding of your mistakes, but also your appreciation for the aspects of your thinking that were already correct and successful in your original attempt. It is hoped that you will hold on to the good elements you already have and add new good ones by completing the feedback.

Poor Generalization – Focuses on generic activity

- “I learned to read the question carefully”

- “I learned to pick the right equations before solving a problem”

Poor Generalization – Focuses on the specific problem

- “I learned that the amount of work from A to B is the same as the amount of work from B to C.”

Good Generalization – Generalizes beyond the specific problem

- “I learned that the acceleration does not depend on the velocity. This is consistent with Newton’s Second Law which says that the acceleration depends only on the net force and the total mass.”

Clerical Errors

Clerical errors are those where you answered the question incorrectly in a way that was not due to a lack of physics understanding and where it is not reasonable to expect that you would be able to improve your physics understanding or mathematical fluency by learning from your mistakes. Examples of clerical errors include: your answer being incorrect due to a silly math error (accidental extra factor of 10 from a unit conversion, obvious arithmetic errors), forgetting to include units on your final answer, or making a mistake due to not reading the question carefully. These are errors where completing the Generalization Phase seems unproductive because the only thing sensible to write would be something along the lines of “I learned to read the question carefully” or “I need to be more careful of my arithmetic and always double-check my solutions.” Remember that errors in translation of representation are not considered to be clerical errors.

For clerical errors I ask you only to do one phase, the Correction Phase (CP). In this phase you identify your clerical error, how it led to your incorrect answer and how the corrected clerical error leads to a correct answer.

Acknowledgements: The quiz feedback assignment was originally developed by Charles Henderson (Western Michigan University) and Kathleen Harper (The Ohio State University) and much of the wording is theirs or is based on theirs.

The Science Learnification (Almost) Weekly – May 30, 2011

Posted: May 30, 2011 Filed under: Arduino, Flipped Classroom, Homework, Learnification Weekly, Oral Assessments, Science Journalism, Standards-Based Grading

This is a collection of things that tickled my science education fancy in the past couple of weeks or so.

Facilitating student discussion

- Facilitating Discussion with Peer Instruction: This was buried somewhere in my to-post pile (the post is almost a month old). The always thoughtful Brian Frank discusses a couple of counter-productive things that most of us end up doing when trying to facilitate student discussion. Buried in the comments, he adds a nice list of more productive things the facilitator can say in response to a student’s point to help keep the discussion going.

Dear Mythbusters, please make your data and unused videos available for public analysis

- An open letter to Mythbusters on how to transform science education: John Burk shares his thoughts with the Mythbusters on the good they are doing for science education and the public perception of science (and scientists), and then goes one step further and asks them to share their raw experimental data and video for all their experiments and trials, failed and successful. Worth noting is that Adam Savage is very active in the skeptical movement, a group of folks who consider science education to be a very high priority.

End of year reflections

Well, it is that time of the year when classes are wrapping up and folks are reflecting on the year. Here are a couple of such posts.

- Time for New Teaching Clothes: SBG Reflections: Terie Engelbrecht had a handful of reflection posts over the past couple of weeks. In this post she does a nice job of reminding us that for any instructional strategy that is unfamiliar to students, we need to communicate to the students WHY we have chosen to use it. This communication needs to happen early (as in the first day) and be repeated often (since the first day is a murky blur to most of them). On a personal side note, I spend most of my first day of class communicating to my students that the instructional strategies I use were chosen, to the best of my abilities and knowledge, to help them learn, because I care about their learning. Earlier this month a parent told me that after the first day of class her daughter came home very excited about my class because of my message about caring about her learning. I couldn’t have smiled bigger.

- Thoughts on the culture of an inverted classroom: Robert Talbert discusses what is essentially a buy-in issue, with his end-of-term feedback showing that three-quarters of his students see the value of his flipped/inverted classroom approach. This number is pretty consistent with my own experience, where I judge buy-in by the fraction of students who complete their pre-lecture assignments. He makes a nice point at the end that students used to an inverted classroom would probably be much more appalled by a regular lecture course than vice versa.

- “Even our brightest students…” Part II: Michael Rees writes about his own (student) perspective on Standards-Based Grading. We need more blogs like this that give the student perspective on education; they are fantastic.

An experiment in not using points in the classroom

- Pointslessness: An Experiment in Teenage Psychology: Shawn Cornally ran a bioethics class where the students’ work for almost the entire year did not count toward their grade, and they discussed readings and movies that were “interesting” (I’m not sure how these were judged to be interesting, but looking through the list I’m pretty sure I would find most of them interesting). Without marks attached, the students engaged in the discussions for the sake of engaging in the discussions, and those students who usually try to glean what is going on from the classroom discussions alone (instead of doing the readings themselves) would often go and do the readings after the discussions.

Effective communication of physics education research

- Get the word out: Effective communication of physics education research: Stephanie Chasteen posts and discusses her fantastic talk from the Foundation and Frontiers of Physics Education Research – Puget Sound conference (FFPER and FFPER-PS are by far my favorite conferences btw). The talk discussed the generally poor job that physics education researchers do of communicating with the outside world and discussed some strategies to become more effective in this communication.

A few more posts of interest

- It is just fine to give a quiz based on the homework that’s due today: Agreed! I do it too, but I use online homework that provides instant feedback so they show up in class having already received some feedback on their understanding.

- Why Schools Should Embrace the Maker Movement: I’m hoping to develop an upper-year electronics course based on Arduino, and requiring only intro computer science and physics as prerequisites. Go Makers!

- Probing potential PhDs: One of a grad student’s responsibilities is typically to be a teaching assistant and some folks at Stony Brook are taking this into account when interviewing potential new grad students by asking them to explain, at an undergraduate level, the answer to conceptual challenge problems. I think I want this collection of challenge problems for my own use.

The Science Learnification Weekly (April 18, 2011) – Homework Special Edition

Posted: April 18, 2011 Filed under: Homework, Learnification Weekly

This is a collection of things that tickled my science education fancy in the last week or so. My internet was down for most of the week, so this week it is all on one topic: homework.

Cramster articles in The Physics Teacher

Over the past two months Michael Grams has published two articles on Cramster in the American Association of Physics Teachers publication The Physics Teacher. Cramster is a website where students can go to get poorly worked out solutions to textbook problems (more on this later) or get live help from “experts”. It has a tiered membership where people have some access for free but have to pay to have full access.

- Cramster: Friend or Foe? (free article): Grams discusses the website, and what some of his previous students and fellow educators think about it. He also sets up his experiment, which is discussed in the second article.

- The Cramster Conclusion (not a free article): His research question is “Could giving students the answers to their assigned homework problems be an effective way of teaching them physics?” His students were provided with both a full membership to Cramster and, two days after homework assignments were given, the full textbook solutions to those problems. The homework was never collected or graded, but each assignment was followed up with an in-class quiz consisting of a numerical problem similar to one of the homework problems. He used common conceptual diagnostic surveys (the Force Concept Inventory in his Mechanics sections and the Conceptual Survey of Electricity and Magnetism in his Electricity and Magnetism sections), administered exit surveys on the students’ use of the solutions, and held some follow-up student interviews. The most interesting findings (to my mind) were (1) that students greatly preferred the textbook solutions over the Cramster ones, and (2) that the students with the best performance on the conceptual diagnostic surveys typically focused much of their attention on the “reasoning” part of the textbook solutions when they used them, whereas the students who did poorly on the surveys completely ignored the “reasoning” parts and focused only on the mechanical steps of the solution. The students found that the Cramster solutions often had errors, huge gaps in logic, and no reasoning steps at all, which is why they preferred the textbook solutions. This article really would have benefited from using graphs as its main method of communicating data instead of gigantic tables and endless numbers within the body of the article.

More challenges to standard assign and grade homework practices

- The Grading Dilemma: What’s Effort Worth? Shawn Cornally (Think Thank Thunk blog): “You can stop grading things. You can alleviate your students’ performance anxiety and points addiction by allowing them to engage with things purely for the fun of it. I’m so angry right now for how hippy-dippy this must be coming across.” Go read it.

- What does education research really tell us? Alfie Kohn (author of The Homework Myth and many other books) talks about three traditional teaching practices (behavior change through rewards, assigning homework, and teaching by telling) and discusses research which challenges common practices and/or findings from smaller previous studies. When researchers looked at the influence of homework on learning while factoring in other things such as instruction and motivation, they found that “Homework no longer had any meaningful effect on achievement at all, even in high school.”

Randomized numbers in pencil and paper assignments (or quizzes/exams)

- DIY personalized, randomized assignments: Ed Hitchcock (TeachSciene.net blog) discusses how he uses some standard Microsoft Office programs to make student assignments with randomized numbers so that no two students have exactly the same question, meaning that their discussions of homework can focus more on the process than only on the solutions. This randomization is common in online homework software such as Mastering, WebAssign, LON-CAPA, etc., but it’s nice to see a quick way to bring it into the pencil-and-paper realm.
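Ed Hitchcock’s approach uses Microsoft Office; as an alternative illustration (not his method), here is a minimal Python sketch of the same idea. Each student’s numbers come from a random generator seeded with the assignment and student IDs, so the instructor can regenerate any student’s version later to build an answer key. The function name, ID format, variable names, and ranges are all hypothetical.

```python
import random


def personalized_values(student_id, assignment_id, ranges):
    """Return one student's randomized values for an assignment.

    `ranges` maps a (hypothetical) variable name to (low, high, decimals).
    The same IDs always give the same numbers, so an answer key can be
    regenerated from the IDs alone.
    """
    # random.Random hashes string seeds deterministically, so results do
    # not change between Python runs.
    rng = random.Random(f"{assignment_id}:{student_id}")
    return {
        name: round(rng.uniform(low, high), decimals)
        for name, (low, high, decimals) in ranges.items()
    }


if __name__ == "__main__":
    # Hypothetical problem: a cart of mass m moving at speed v.
    ranges = {"m_kg": (1.0, 5.0, 1), "v_m_per_s": (2.0, 10.0, 1)}
    for sid in ["s001", "s002"]:
        vals = personalized_values(sid, "hw07", ranges)
        # Answer key computed from the same numbers the student sees.
        ke = 0.5 * vals["m_kg"] * vals["v_m_per_s"] ** 2
        print(sid, vals, f"KE = {ke:.1f} J")
```

Seeding per student (rather than drawing from one shared generator) is the design choice that keeps each version reproducible even if students are added or removed from the class list.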
