Workshop slides for Gilley & Ives “Two Stage Exams: Learning Together?”

Posted: May 4, 2017 Filed under: Group Quizzes

Today Brett Gilley and I ran a workshop, “Two Stage Exams: Learning Together?”, at the University of British Columbia Okanagan Learning Conference. Great fun was had and many ideas were exchanged. Slides below.

Gilley Ives – Two Stage Exam Workshop – UBCO Learning Conference – 2017-05-04

AAPTSM14/PERC2014: Measuring the Learning from Two-Stage Collaborative Group Exams

Posted: August 3, 2014 Filed under: Group Quizzes

In an attempt to get back into semi-regular blogging, I am setting aside my pile of half-written posts to share the work that I presented at the 2014 AAPT Summer Meeting and PERC 2014.

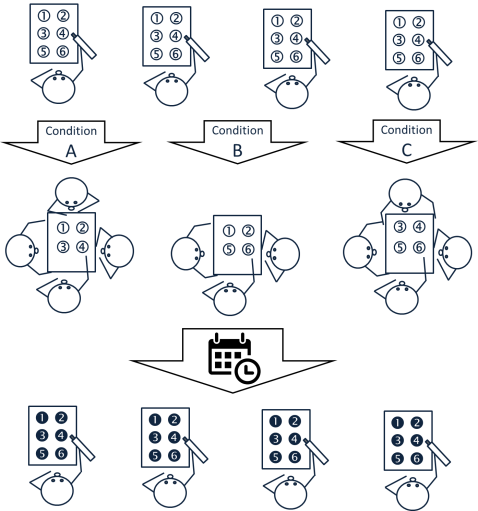

The quick and dirty version is that I was able to run a study on the effectiveness of group exams in a three-section course that enrolled nearly 800 students. The image above summarizes the study design, which was repeated for each of the two midterms.

(First row) The students all take/sit/write their exams individually. (Second row) After all the individual exams have been collected, they self-organize into collaborative groups of 3-4. There were three different versions of the group exam (conditions A, B and C), each containing a different subset of the questions from the individual exam. The versions were designed so that, for each question (1-6), one-third of the groups would not see that question on their group exam (control) and the other two-thirds would (treatment). (Bottom row) The students were retested on the end-of-term diagnostic using questions that matched the original 6 midterm questions in terms of the concept being applied.
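To make the counterbalancing concrete, here is a minimal Python sketch. The post does not say which questions were left off which version, so the split below (version A omits questions 1 and 2, B omits 3 and 4, C omits 5 and 6) is a hypothetical example, and `OMITTED`, `coverage` and `role` are illustrative names. Assuming the groups are spread evenly across the three versions, any split in which each question is omitted from exactly one version gives the 1/3 control, 2/3 treatment ratio described above.

```python
# Hypothetical split: the post does not say which questions each version dropped.
OMITTED = {"A": {1, 2}, "B": {3, 4}, "C": {5, 6}}
QUESTIONS = range(1, 7)  # midterm questions 1-6


def coverage(omitted):
    """Fraction of group-exam versions that include each question."""
    versions = list(omitted)
    return {
        q: sum(q not in omitted[v] for v in versions) / len(versions)
        for q in QUESTIONS
    }


def role(question, version, omitted=OMITTED):
    """Is a group that wrote `version` in the control or treatment arm for `question`?"""
    return "control" if question in omitted[version] else "treatment"


if __name__ == "__main__":
    for q, frac in coverage(OMITTED).items():
        print(f"Q{q}: on {frac:.0%} of group-exam versions")
```

With equal numbers of groups per version, the fraction of versions containing a question equals the fraction of groups that see it.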

Results: As previously mentioned, I went through this cycle for each of the two midterms. For midterm 2, which took place only 1-2 weeks before the end-of-term diagnostic, students who saw a given question on their group exam outperformed those who did not on the matched end-of-term diagnostic questions. Huzzah! However, for the diagnostic questions matched with midterm 1, which took place 6-7 weeks before the end-of-term diagnostic, there were no statistically significant differences between students who saw the matched questions on their group exams and those who did not. The most likely explanation is that the learning in both the control and treatment groups decays over time, and so does the difference between them: after 1-2 weeks there is still a statistically significant difference, but after 6-7 weeks there is not. The result could also reflect differences between the midterm 1 and midterm 2 questions. For some of the questions, the concepts may not have been well enough separated from one another, so group exam discussions may have improved students' learning of concepts from questions that weren’t on their particular group exam. I hope to address these possibilities in a study this upcoming academic year.

Will I abandon this pedagogy? Nope. The group exams may not provide a measurable learning effect which lasts all the way to the end of the term for early topics, but I am more than fine with that. There is a short-term learning effect and the affective benefits of the group exams are extremely important:

- One of the big ones is that these group exams are effectively Peer Instruction in an exam situation. Since we use Peer Instruction in this course, this means that the assessment and the lecture generate buy-in for each other.

- There are a number of affective benefits, such as increased motivation to study, increased enjoyment of the class, and lower failure rates, which have been shown in previous studies (see arXiv link to my PERC paper for more on this). Despite my study design, which had the students encountering different subsets of the original questions on their group exams, all students participated in the same intervention from the perspective of the affective benefits.

I had some great conversations with old friends, new friends and colleagues. I hope to expand on some of the above based on these conversations and feedback from the referees on the PERC paper, but that will be for another post.

Student feedback on having weekly practice quizzes (instead of homework)

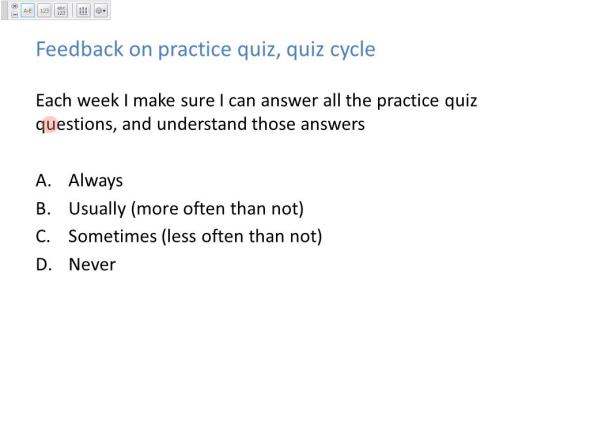

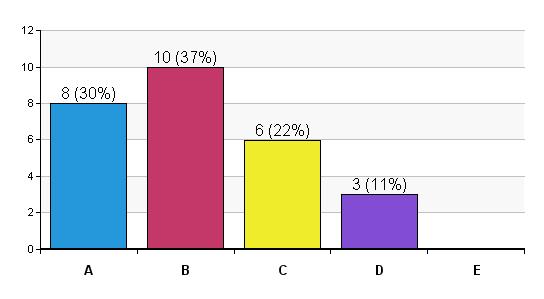

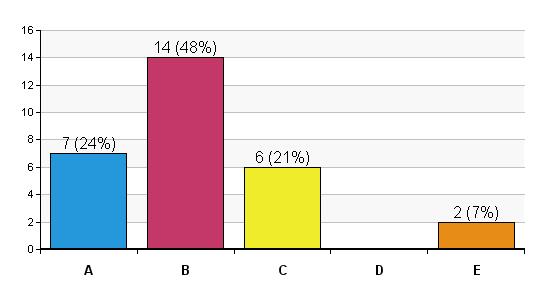

Posted: February 14, 2013 Filed under: Group Quizzes, Homework, Introductory Electricity and Magnetism, Practice Quizzes, Weekly Quizzes | Tags: intro physics, practice quiz questions

This term I eliminated the weekly homework assignment from my calc-based intro physics course and replaced it with a weekly practice quiz (not for marks in any way), meant to help students prepare for their weekly quiz. There’s a post coming that discusses why I have done this and how it has worked, but à la Brian or Mylene, I think it can be valuable to post this student feedback.

I asked a couple of clicker questions related to how they use the practice quizzes and how relevant they find the practice quiz questions in preparing them for the real quizzes. I also handed out index cards and asked for extra comments.

Aside from changing from homework assignments to practice quizzes, the structure of my intro course remains largely the same. I get them to do pre-class assignments, we spend most of our class time doing clicker questions and whiteboard activities, and there is a weekly two-stage quiz (individual then group). I have added a single problem (well, closer to an exercise) to each weekly quiz, whereas in the past I only occasionally asked them to work a problem on a quiz.

Clicker Question 1

Clicker Question 2

Just from a quick scan of the individual student responses on this one, I saw that the students with the highest quiz averages (so far) tended to answer A or B, whereas the students with lower quiz averages tended to answer B or C. I will look at the correlations more closely at a later date, but I find this a really interesting insight.

Additional Written Feedback

Most of the time I ask the students for some feedback after the first month and then continue to ask them about various aspects of the course every couple of weeks. In some courses I don’t do such a great job with the frequency.

Usually, for this first round of feedback, the additional comments are dominated by frustration toward the online homework system (I have used Mastering Physics and smartPhysics), requests/demands for me to do more examples in class, and some comments on there being a disconnect between the weekly homework and the weekly quiz. As you can see below, there is none of that this time. The practice quizzes, the inclusion of a problem on each weekly quiz, and perhaps the provided learning goals, seem to do a pretty good job of communicating my expectations to them (and thus minimize their frustration).

Student comments (that were somewhat on topic)

- I feel like the practice quizzes would be more helpful if I did them more often. I forget that they have been posted so maybe an extra reminder as class ends would help.

- The wording is kind of confusing then I over think things. I think it’s just me though. Defining the terms and the equations that go with each question help but the quizzes are still really confusing…

- Curveball questions are important. Memorize concepts not questions. Changes how students approach studying.

- The group quizzes are awesome for verbalizing processes to others. I like having the opportunity to have “friendly arguments” about question we disagree on

- I love the way you teach your class Joss! The preclass assignments are sometimes annoying, but they do motivate me to come to class prepared

- I enjoy this teaching style. I feel like I am actually learning physics, as opposed to just memorizing how to answer a question (which has been the case in the past).

- I really enjoy the group quiz section. It gets a debate going and makes us really think about the concepts. Therefore making the material stick a lot better.

Last thought: With this kind of student feedback, I like to figure out a couple of things that I can improve or change and bring them back to the class as things I will work on. It looks like I will need to ask them a weekly feedback question which asks them specifically about areas of potential improvement in the course.

Summer 2012 Research, Part 1: Immediate feedback during an exam

Posted: July 25, 2012 Filed under: Group Quizzes, Immediate Feedback Assessment Technique

One of my brief studies, based on data from a recent introductory calculus-based course, was to look at the effect of immediate feedback in an exam situation. The results show that, after being provided with immediate feedback on their answer to the first of two questions which tested the same concept, students had a statistically significant improvement in performance on the second question.

Although I used immediate feedback for multiple questions on both the term test and final exam in the course, I only set up the experimental conditions discussed below for one question.

The question

The question I used (Figure 1) asked about the sign of the electric potential at two different points. A common student difficulty is to confuse the procedures for finding the electric potential (a scalar quantity) and the electric field (a vector quantity) for a given charge distribution. The interested reader might wish to read a study by Sayre and Heckler (link to journal, publication page with direct link to pdf).

Figure 1. Two insulating bars, each of length L, have charges distributed uniformly upon them. The one on the left has a charge +Q uniformly distributed on it and the one on the right has a charge -Q uniformly distributed on it. Assume that V=0 at a distance that is infinitely far away from these insulating bars. Is the potential positive, negative or zero at point A? At point B?

Experimental design and results

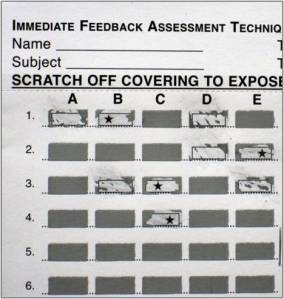

There were three versions of the exam: one version of this question appeared on two of them (Condition 1, 33 students) and the other version appeared on the third (Condition 2, 16 students). In each condition, students answered the first question (Q1) for one of the points using an IFAT scratch card (Condition 1 = point A; Condition 2 = point B). With the scratch cards, students scratched off their chosen answer, and if they had chosen correctly they saw a star. If they were incorrect, they could choose a different answer, and if they were correct on their second try, they received half the points. If they had to scratch a third time to find the correct answer, they received no marks. No matter how they did on the first question, they had learned its correct answer before moving on to the second question (Q2), which asked for the potential at the other point (Condition 1 = point B; Condition 2 = point A). The results for each condition and question are shown in Table 1.
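The partial-credit rule for the scratch-card question can be written as a small function. This is a sketch of the rule as described in the paragraph above, not code used in the study; the name `ifat_score` and the `full_marks` parameter are illustrative.

```python
def ifat_score(tries_needed, full_marks=1.0):
    """Marks for one IF-AT scratch-card item under the rule described above.

    Correct on the 1st scratch -> full marks, 2nd scratch -> half marks,
    3rd scratch or later -> no marks.
    """
    if tries_needed < 1:
        raise ValueError("tries_needed must be at least 1")
    if tries_needed == 1:
        return full_marks
    if tries_needed == 2:
        return full_marks / 2
    return 0.0
```

For example, `ifat_score(2, full_marks=4)` gives 2.0 marks for a four-mark item answered correctly on the second scratch.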

|  | Q1 (scratch card question) | Q2 (follow-up question) |
|---|---|---|
| Condition 1 | Point A: 24/33 correct = 72.7±7.8% | Point B: 28/33 correct = 84.8±6.2% |
| Condition 2 | Point B: 8/16 correct = 50.0±12.5% | Point A: 10/16 correct = 62.5±12.1% |

Table 1: Results are shown for each of the conditions. In condition 1, they answered the question for point A and received feedback, using the IFAT scratch card, before moving on to answer the question for point B. In condition 2, they first answered the question for point B using the scratch card and then moved on to answering the question for point A.
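The ± values in Table 1 are consistent with the standard error of a proportion, SE = sqrt(p(1 − p)/n). The post does not state which uncertainty was used, so treat this as a reconstruction: the sketch below recomputes each entry from the counts in the table, and rounding to one decimal place reproduces the tabulated values. The function name is illustrative.

```python
import math


def proportion_with_se(correct, n):
    """Return (percent correct, standard error in percentage points)
    for a binary-scored item, using SE = sqrt(p * (1 - p) / n)."""
    p = correct / n
    se = math.sqrt(p * (1 - p) / n)
    return 100 * p, 100 * se


# Counts taken from Table 1.
TABLE_1 = {
    ("Condition 1", "Q1, point A"): (24, 33),
    ("Condition 1", "Q2, point B"): (28, 33),
    ("Condition 2", "Q1, point B"): (8, 16),
    ("Condition 2", "Q2, point A"): (10, 16),
}

for (condition, item), (k, n) in TABLE_1.items():
    pct, se = proportion_with_se(k, n)
    print(f"{condition}, {item}: {k}/{n} = {pct:.1f}±{se:.1f}%")
```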

So that I can look at the improvement from all students when going from the scratch card question (Q1) to the follow-up question (Q2), I need to show that there is no statistically significant difference between how the students answered the question for point A and for point B. Figure 2 shows that a two-tailed repeated-measures t-test fails to reject the null hypothesis that mean performance on points A and B is the same. Thus we have no evidence that performance differs between the two questions, which means we can move on to comparing how the students performed on the follow-up question (Q2) with how they performed on the scratch card question (Q1).

Figure 2. A two-sided repeated-measures t-test shows that there is no statistically significant difference in performance on the question for points A and B.
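Both comparisons (point A vs. point B in Figure 2, and Q1 vs. Q2 in Figure 3) are repeated-measures, or paired, t-tests: each student contributes one pair of scores, and the test is run on the per-student differences. The per-student data are not in the post, so this sketch only computes the t statistic and degrees of freedom for score lists you supply; the function name `paired_t` is hypothetical. The p-value then comes from a t-distribution with n − 1 degrees of freedom (two-tailed for Figure 2, one-tailed for Figure 3), for example from a t table or `scipy.stats.ttest_rel`.

```python
import math
from statistics import mean, stdev


def paired_t(first, second):
    """Paired (repeated-measures) t statistic for `second - first`.

    `first` and `second` hold one score per student (e.g. 0 = wrong,
    1 = right), listed in the same student order.
    Returns (t, degrees_of_freedom).
    """
    if len(first) != len(second):
        raise ValueError("need exactly one pair of scores per student")
    diffs = [b - a for a, b in zip(first, second)]
    n = len(diffs)
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1
```

With the made-up lists `first = [1, 0, 1, 1, 0]` and `second = [1, 1, 1, 1, 0]`, `paired_t(first, second)` gives t = 1.0 (up to floating-point rounding) with 4 degrees of freedom.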

Figure 3 shows a 12.2 percentage-point improvement from the scratch card question (Q1) to the follow-up question (Q2). Using a one-tailed repeated-measures t-test (it was assumed that performance on Q2 would be better than on Q1), the null hypothesis is rejected at p = 0.0064. Since I have made two comparisons using these same data, a Bonferroni correction should be applied, which lowers the significance threshold to p = 0.05/2 = 0.025. Because p = 0.0064 is below this threshold, the improvement from Q1 to Q2 remains statistically significant.

Figure 3. A one-sided repeated-measures t-test shows that there is a statistically significant improvement in performance from the scratch card question (Q1, 65.3±6.8%) to the follow-up question (Q2, 77.5±6.0%).
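The Bonferroni step is simple arithmetic: divide the significance level by the number of comparisons made on the same data, then compare each p-value with that stricter threshold. A minimal sketch using the numbers quoted above (α = 0.05, two comparisons, p = 0.0064 for the Q1 to Q2 test); the function name is illustrative.

```python
def bonferroni_threshold(alpha, n_comparisons):
    """Per-comparison significance threshold under a Bonferroni correction."""
    if n_comparisons < 1:
        raise ValueError("need at least one comparison")
    return alpha / n_comparisons


threshold = bonferroni_threshold(0.05, 2)  # point A vs. B, and Q1 vs. Q2
p_q1_to_q2 = 0.0064                        # one-tailed paired t-test (Figure 3)
print(f"threshold = {threshold:.3f}, significant: {p_q1_to_q2 < threshold}")
```

This prints `threshold = 0.025, significant: True`.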

Future work

In addition to reproducing these results using multiple questions, I would also like to examine whether these results hold for different conditions. Additional factors which could be examined include different disciplines, upper-division vs. introductory courses, and questions which target different levels of Bloom’s taxonomy.

Note: I found a paper that looks at the effect of feedback on follow-up questions as part of exam preparation and discuss it in more detail in this follow-up post.

My BCAPT Presentation on Group Quizzes

Posted: May 28, 2012 Filed under: Group Quizzes, Immediate Feedback Assessment Technique, My Presentations

I forgot to post this. I gave a talk on group quizzes at the BCAPT AGM (local AAPT chapter) nearly a month ago. It was based on the same data analysis as a poster that I presented the previous year (two-stage group quiz posts 0 and 1), but I added some comparisons to other similar studies.


I’m in the middle of some data analysis for data I collected during the past year and will be presenting my initial findings at FFPER-PS 2012.

Student opinions on what contributes to their learning in my intro E&M course

Posted: March 23, 2012 Filed under: Clickers, Flipped Classroom, Group Quizzes, Homework, Immediate Feedback Assessment Technique, Inverted Classroom, Quiz Correction Assignments, Whiteboards

We are a couple of weeks away from our one and only term test in my intro calc-based electricity and magnetism course. This test comes in the second-to-last week of the course and I pitch it to them as practice for the final. The term test is worth 10-20% of their final grade and the final exam 30-40%; within these ranges, the relative weights are chosen to maximize each individual student’s grade.
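Choosing the weights per student amounts to taking the better of the allowed weightings. The post gives only the ranges, so this sketch assumes (hypothetically) that the two weights always sum to 50%, i.e. a 10% term test pairs with a 40% final and a 20% term test with a 30% final. Because the weighted total is linear in the term-test weight, only the two endpoints need checking. The function name and parameters are illustrative.

```python
def best_exam_weighting(term_test, final_exam, test_range=(0.10, 0.20), combined=0.50):
    """Return (test_weight, final_weight, contribution) that maximizes the
    exams' contribution to a student's final grade (scores in percent).

    Assumption (not stated in the post): the term-test and final-exam
    weights always sum to `combined`. The contribution is linear in the
    test weight, so the maximum is always at one end of `test_range`.
    """
    options = []
    for w_test in test_range:
        w_final = combined - w_test
        options.append((w_test, w_final, w_test * term_test + w_final * final_exam))
    return max(options, key=lambda option: option[2])
```

For a student with 80% on the term test and 60% on the final, the 20%/30% split gives 34 points versus 32 for 10%/40%, so `best_exam_weighting(80, 60)` picks the 20% term-test weight.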

Today I asked them how they feel the major course components are contributing to their learning:

How much do you feel that the following course component has contributed to your learning so far in this course?

This is a bit vague, but I told them to vote according to what contributes to their understanding of the physics in this course. It doesn’t necessarily mean what makes them feel the most prepared for the term test, but if that is how they wanted to interpret it, that would be just fine.

For each component below, I briefly describe how it fits into the overall course, so by the end you should have a sense of how the whole course works.

The smartphysics pre-class assignments

The pre-class assignments are the engine that allows my course structure to work the way I want it to, and I have been writing about them a lot lately (see my most recent post in a longer series). My specific implementation is detailed under ‘Reading assignments and other “learning before class” assignments’ in this post. The quick and dirty explanation is that, before coming to class, my students watch multimedia prelectures that have embedded conceptual multiple-choice questions. Afterward they answer 2-4 additional conceptual multiple-choice questions where they are asked to explain the reasoning behind each of their choices. They earn marks for putting in an honest effort to explain their reasoning, as opposed to for choosing the correct answer. Then they show up to class ready to build on what they learned in the pre-class assignment.

Smartphysics pre-class assignments

A) A large contribution to my learning.

B) A small contribution to my learning, so I rarely complete them.

C) A small contribution to my learning, but they are worth marks so I complete them.

D) No contribution to my learning, so I rarely complete them.

E) No contribution to my learning, but they are worth marks so I complete them

The smartphysics online homework

The homework assignments are a combination of “Interactive Examples” and multi-part end-of-chapter-style problems.

The Interactive Examples tend to be fairly long and challenging problems where the online homework system takes the student through multiple steps of qualitative and quantitative analysis to arrive at the final answer. Some students seem to like these questions and others find them frustrating because they managed to figure out 90% of the problem on their own but are forced to step through all the intermediate guiding questions to get to the bit that is giving them trouble.

The multi-part end-of-chapter-style problems require, in theory, conceptual understanding to solve. In practice, I find that a lot of the students simply number mash until the correct answer comes out the other end, and then they don’t bother to step back and try to make sure that they understand why that particular number mashing combination gave them the correct answer. The default for the system (which is the way that I have left it) is that they can have as many tries as they like for each question and are never penalized as long as they find the correct answer. This seems to have really encouraged the mindless number mashing.

This is why their response regarding the learning value of the homework really surprised me. Enough of them have admitted that they usually number mash that I would have expected them not to place so much learning value on the homework.

The online smartphysics homework

A) A large contribution to my learning.

B) A small contribution to my learning, so I rarely complete them.

C) A small contribution to my learning, but it is worth marks so I complete it.

D) No contribution to my learning, so I rarely complete it.

E) No contribution to my learning, but it is worth marks so I complete it

Studying for quizzes and other review outside of class time

Studying for quizzes and other review outside of class time

A) A large contribution to my learning.

B) A small contribution to my learning, but I do it anyway.

C) A small contribution to my learning so I don’t bother.

D) No contribution to my learning so I don’t bother.

Group quizzes

I have an older post that discusses these in detail, but I will summarize here. Every Friday we have a quiz. They write the quiz individually, hand it in, and then re-write the same quiz in groups. They receive instant feedback on their group quiz answers thanks to IF-AT multiple-choice scratch-and-win sheets and receive partial marks based on how many tries it took them to find the correct answer. Marks are awarded 75% for the individual portion and 25% for the group portion OR 100% for the individual portion if that would give them the better mark.

The questions are usually conceptual and often test the exact same conceptual step needed for them to get a correct answer on one of the homework questions (but not always with the same cover story). There are usually a lot of ranking tasks, which the students do not seem to like, but I do.
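The two-stage marking rule described above fits in one line: take whichever is higher, the 75/25 blend or the individual score alone. This sketch takes both scores as percentages; how the group percentage is built from the scratch-sheet partial marks is not detailed here, so it is simply an input. The function name is illustrative.

```python
def group_quiz_mark(individual, group):
    """Two-stage quiz mark (percentages): 75% individual + 25% group,
    or 100% individual if that gives the better mark."""
    blended = 0.75 * individual + 0.25 * group
    return max(blended, individual)
```

A student who scores 60% individually and whose group scores 100% gets 70%; a student who scores 90% individually with a group score of 50% keeps the 90%, so the group stage can only help.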

Group Quizzes

A) A large contribution to my learning.

B) A small contribution to my learning.

C) They don’t contribute to my learning.

Quiz Corrections

I have an older post that discusses these in detail, but I will again summarize here. For the quiz correction assignments they are asked, for each question, to diagnose what went wrong and then to generalize their new understanding of the physics involved. If they complete these assignments in the way I have asked, they earn back half of the marks they lost (e.g. a 60% quiz grade becomes 80%).
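Because the corrections earn back half of the lost marks, the corrected grade is the original grade plus half the gap to 100%. A minimal sketch, with an illustrative function name and a flag standing in for whether the corrections were completed as asked:

```python
def corrected_quiz_grade(original, completed_as_asked=True):
    """Quiz-correction rule: completing the corrections as asked earns back
    half of the marks lost. Grades are percentages (0-100)."""
    if not completed_as_asked:
        return original
    return original + (100 - original) / 2
```

`corrected_quiz_grade(60)` returns 80.0, matching the example above.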

I am delighted to see that 42% of them find that these have a large contribution to their learning. The quizzes are worth 20% of their final grade, so I would have guessed that their perceived learning value would get lost in the quest for points.

Quiz Corrections

A) A large contribution to my learning.

B) A small contribution to my learning, so I rarely complete them.

C) A small contribution to my learning, but they are worth marks so I complete them.

D) No contribution to my learning, so I rarely complete them.

E) No contribution to my learning, but they are worth marks so I complete them.

In-class stuff

I am a full-on interactive engagement guy. I use clickers, in the question-driven instruction paradigm, as the driving force behind what happens during class time. Instead of working examples at the board, I either (A) use clicker questions to step the students through the example so that they consider each of the important steps for themselves instead of me just showing them, or (B) get them to work through examples in groups on whiteboards. Although I aspire to have the students report out their solutions in a future version of the course (“board meeting”), what I usually do when they work through an example on their whiteboards is wait until the majority of the groups are mostly done and then work through the example at the board with lots of their input, often generating clicker questions as we go.

The stuff we do in class

A) A large contribution to my learning.

B) A small contribution to my learning.

C) It doesn't contribute to my learning.

The take home messages

Group quizzes rule! The students like them. I like them. The research tells us they are effective. Everybody wins. And they only take up approximately 10 minutes each week.

I need to step it up in terms of the perceived learning value of what we do in class. That two-thirds figure is somewhere between an accurate estimate and a small overestimate of the fraction of students in class who, at any moment, are actively engaged with the task at hand. This class is 50% larger than my usual intro courses (54 students in this case) and I have been doing a much poorer job than usual of circulating and engaging individual students or groups during clicker questions and whiteboarding sessions. The other third of the students are a mix of students surfing/working on stuff for other classes (which I decided was something I was not going to fight in a course this size) and students who have adopted the “wait for him to tell us the answer” mentality. Peter Newbury talked about these students in a recent post. I have lots of things in mind to improve both their perception and the actual learning value of what is happening in class. I will sit down and create a coherent plan of attack for the next round of courses.

I’m sure there are lots of other take home messages that I can pluck out of these data, but I will take my victory (group quizzes) and my needs-improvement item (working on the in-class stuff) and look forward to continuing to work on course improvement.

Resources for selling and running an (inter)active intro physics class

Posted: August 10, 2011 Filed under: Clickers, Flipped Classroom, Group Quizzes, Introductory Mechanics, SmartPhysics, Student Textbook Reading

This post is in response to Chad Orzel’s recent post about moving toward a more active classroom. He plans to get the students to read the textbook before coming to class, and then minimize lecture in class in favour of “in-class discussion/ problem solving/ questions/ etc.” At the end of the post he puts out a call for resources, which is where this post comes in.

There are three main things I want to discuss in this post, and (other than some links to specific clicker resources) they are all relevant to Chad or anybody else considering moving toward a more active classroom.

- Salesmanship is key. You need to generate buy-in from the students so that they truly believe that the reason you are doing all of this is so that they will learn more.

- When implementing any sort of “learn before class” strategy, you need to step back and decide what you realistically expect them to be able to learn from reading the textbook or watching the multimedia presentation.

- The easiest first step toward a more (inter)active classroom is the appropriate use of clickers or some reasonable low-tech substitute.

Salesmanship

I also realized early on in my career that salesmanship is key. I need to explain why I want them to do the reading, and the 3 JiTT (ed. JiTT = Just-in-Time-Teaching) questions, and the homework problems sets, etc. My taking some time periodically to explain why it is all in their best interest (citing the PER studies, or showing them the correlation between homework done and exam grades), seems to help a lot with the end of term evals.

And I completely agree. I changed a lot of little things between my first and second year of teaching intro physics, but the thing that seemed to matter the most is that I managed to generate much more buy-in from the students the second year that I taught. Once they understood and believed that all the “crazy” stuff I was doing was for their benefit and was backed up by research, they followed me down all the different paths that I took them. My student evals, for basically the same course, went up significantly (0.75ish on a 5-point scale) between the first and second years.

A resource that I will point out for helping to generate student buy-in was put together for Peer Instruction (in Computer Science), but much of what is in there is applicable beyond Peer Instruction to the interactive classroom in general. Beth Simon (Lecturer at UCSD and former CWSEI STLF) made two screencasts to show/discuss how she generates student buy-in:

- Introduction to PI for Class: In this screencast Beth runs through her salesmanship slides in the same way that she does for a live class. “You don’t have to trust the monk!”

- Overview of Supporting Slides for Clickers Peer Instruction: In this screencast Beth informally discusses some of the supporting slides, which explain the reasons for and value of using Peer Instruction.

Reading assignments and other “learning before class” assignments

This is a topic that I have posted about many times and discussed in many conversations. I will briefly summarize my thoughts here, while pointing interested readers to some relevant posts and conversations.

When implementing “read the text before class” or any other type of “learn before class” assignments, you have to establish what exactly you want the students to get out of these assignments. My purpose for these types of assignments is to get them familiar with the terminology and lowest-level concepts; anything beyond that is what I want to work on in class. With that purpose in mind, not every single paragraph or section of a given chapter is relevant for my students to read before coming to class. I refer to this as “textbook overhead”, and Mylene discussed this as part of a great post on student preparation for class.

I have tried reading quizzes at the beginning of class and found it too hard to pitch them at exactly the right level, where most of the students who did the reading would get them right and most of the students who didn’t would not.

Last year I used a modified version of the reading assignment portion of JiTT (this list was originally posted here):

- Assign reading

- Give them 3 questions. These questions are either directly from the JiTT book (I like their estimation questions) or are easy clicker questions pulled from my collection. For the clicker questions I ask them to explain their reasoning in addition to simply answering the question.

- Get them to submit via web-form or email

- I respond to everybody’s submissions for each question to try to help clear up any mistakes in their thinking. I use a healthy dose of copy and paste after the first few and can make it through 30ish submissions in just over an hour.

- Give them some sort of credit for each question in which they made an effortful response whether they were correct or incorrect.

I was very happy with how this worked out. I think it really helped that I always responded to each and every one of their answers, even if it was nothing more than “great explanation” for a correct answer. I generated enough buy-in to have an average completion rate of 78% on these assignments over the term in my Mechanics course last time I taught it. I typically weight these assignments at 8-10% of their final grade, so the students have a pretty strong (external) incentive to do them.

As I mentioned previously, my current thinking is that I want the initial presentation (reading or screencast) that the students encounter to be one that gets them familiar with terminology and low-level or core concepts. As Mylene says “It’s crazy to expect a single book to be both a reference for the pro and an introduction for the novice.” So that leaves me in a position where I need to generate my own “first-contact” reading materials or screencasts that best suit my needs and this is something that I am going to try out in my 3rd-year Quantum Mechanics course this fall.

It turns out that for intro physics there is an option which will save me this work. I am using smartPhysics this year (disclaimer: the publisher is providing the text and online access completely free to my students for the purposes of evaluation). To explain what smartPhysics is, I will pseudo-quote from something I previously wrote:

For those teaching intro physics that are more interested in screencasting/pre-class multimedia video presentations instead of pre-class reading assignments, you might wish to take a look at SmartPhysics. It’s a package developed by the PER group at UIUC that consists of online homework, online pre-class multimedia presentations and a shorter than usual textbook (read: cheaper than usual) because there are no end-of-chapter questions in the book, and the book’s presentation is geared more toward being a student reference since the multimedia presentations take care of the “first time encountering a topic” level of exposition. My understanding is that they paid great attention to Mayer’s research on minimizing cognitive load during multimedia presentations. I will be using SmartPhysics for my first time this coming fall and will certainly write a post about my experience once I’m up and running.

Since writing that I have realized that the text from the textbook is more or less the transcript of the multimedia presentations so in a way this textbook actually is a reference for the pro and an introduction for the novice. They get into more challenging applications of concepts in their interactive examples which are part of the online homework assignments. For example, they don’t even mention objects landing at a different height than the launch height in the projectile motion portion of the textbook, but have an interactive example to look at this extension of projectile motion.

The thing with smartPhysics is that their checkpoint assignments are basically the same as the pre-class assignments I have been using so it should be a pretty seamless transition for me from that perspective. I still haven’t figured out how easy it is to give students direct feedback on their checkpoint assignment questions in smartPhysics, and remember that I consider that to be an important part of the student buy-in that I have managed to generate in the past.

(edit: the following discussion regarding reflective writing was added Aug 11) Another option for getting students to read the text before coming to class is reflective writing, which is promoted in Physics by Calvin Kalman (Concordia). From “Enhancing Students’ Conceptual Understanding by Engaging Science Text with Reflective Writing as a Hermeneutical Circle”, CS Kalman, Science & Education, 2010:

For each section of the textbook that a student reads, they are supposed to first read the extract very carefully trying to zero in on what they don’t understand, and all points that they would like to be clarified during the class using underlining, highlighting and/or summarizing the textual extract. They are then told to freewrite on the extract. “Write about what it means.” Try and find out exactly what you don’t know, and try to understand through your writing the material you don’t know.

This writing itself is not marked since the students are doing the writing for the purposes of their own understanding. But this writing can be marked for being complete.

Clicker questions and other (inter)active physics classroom resources

Chad doesn’t mention anywhere in his post that he is thinking of using clickers, but I highly recommend using them or a suitable low-tech substitute for promoting an (inter)active class. I use a modified version of Mazur’s Peer Instruction and have blogged about my specific use of clickers in my class in the past. Many folks have implemented vanilla or modified peer instruction with cards and had great success.

Clicker question resources: My two favourite resources for intro physics clicker questions are:

- The Ohio State clicker question sequences and,

- The collections put together by the folks at Colorado.

I quite like the questions that Mazur includes in his book but find that they are too challenging for my students without appropriate scaffolding in the form of intermediate clicker questions which can be found in both the resources I list above.

Clicker-based examples: Chad expressed frustration that “when I do an example on the board, then ask them to do a similar problem themselves, they doodle aimlessly and say they don’t have any idea what to do.” To deal with this very issue, I have a continuum that I call clicker-based examples and will discuss the two most extreme cases that I use, but you can mash them together to produce anything in between:

- The easier-for-students case is that, when doing an example or derivation, I do most of the work but get THEM to make the important mental jumps. For a typical example, I will identify 2-4 points in the example that would cause them some grief if they tried to do the example completely on their own. When I work this example at the board (or on my tablet) I will work through the example as usual, but when I get to one of the “grief” points I will pose a clicker question. These clicker questions might be things like “which free-body diagram is correct?”, “which of the following terms cancel?” or “which reasoning allowed me to go from step 3 to step 4?”

- The other end of the spectrum is that I give them a harder question and still identify the “grief” points. But I instead get them to do all the work in small groups on whiteboards. I then help them through the question by posing the clicker questions at the appropriate times as they work through the problems. Sometimes I put all the clicker questions up at the beginning so they have an idea of the roadmap of working through the problem.

An excellent resource for questions to use in this way is Randy Knight’s 5 Easy Lessons, which is a supercharged instructor’s guide to his calculus-based intro book. The first time I used a lot of these questions I found that the students often threw their hands up in the air in confusion. So I would wander around the room (36 students) and note the points at which the students were stuck and generate on-the-fly clicker questions. The next year I was able to take advantage of those questions I had generated the previous year and then had all the “grief” points mapped out and the clicker questions prepared for my clicker-based examples.

Group Quizzes

Group quizzes aren’t related to clicker questions, but they are related to the (inter)active class. They are something that I have previously posted about, and I have also presented a poster on the topic. I give the students a weekly quiz that they write individually first, and then, after all the individual quizzes have been handed in, they re-write the quiz in groups. Check out the post that I linked to if you want to learn more about exactly how I implement these, as well as the pros and cons. Know that they are my single favourite thing that happens in my class, because they are when I get to see the students at their most animated while discussing the application of physics concepts. It is loud and wonderful, and I am trying to figure out how to show that there is a quantifiable learning benefit.

Two-Stage Group Quizzes Part 0: Poster Presentation from FFPERPS 2011

Posted: April 9, 2011 Filed under: Global Physics Department, Group Quizzes, Immediate Feedback Assessment Technique

This is Part 0 because I am just posting a link to the poster, as I presented it at FFPERPS 2011 (Foundations and Frontiers of Physics Education Research: Puget Sound):

Part 1 of this planned series of posts is where I go into some detail about the what, how and why (the intro section of the poster).

I am scheduled to present on this topic at the forthcoming April 13, 2011 Global Physics Department meeting, which takes place at 9:30 EDT. Please come join us in our Elluminate session if you are interested (the more the merrier). We also have a Posterous if you’re interested.

Two-Stage Group Quizzes Part 1: What, How and Why

Posted: March 23, 2011 Filed under: Group Quizzes, Immediate Feedback Assessment Technique

Note: This is the first in a series of posts, and is based on my fleshing out in more detail a poster that I presented at the fantastic FFPERPS conference last week. The basic points of group exam pros and cons, and the related references borrow very heavily from Ref. 5 (thanks Brett!). All quoted feedback is from my students.

Introduction

A two-stage group exam is a form of assessment where students learn as part of the assessment. The idea is that the students write an exam individually, hand in their individual exams, and then re-write the same or a similar exam in groups, where learning, volume and even fun are all had.

Instead of doing this for exams, I used the two-stage format for my weekly 20-30 minute quizzes. These quizzes would take place on the same day that the weekly homework was due (Mastering Physics, so they had the instant feedback there as well), with the homework being due the moment class started. I used them for the first time in Winter 2011 in an introductory calculus-based E&M course with an enrolment of 37 students.

The format of the weekly quiz (20-30 minutes total) was as follows:

- The students wrote their 3-5 multiple-choice and short-answer question quiz individually and afterward handed these solo quizzes in.

- The group quiz (typical group size = 3) consisted of most or all of the individual questions, in multiple-choice format. Most questions had five choices.

- The marks were weighted 75% for the individual component and 25% for the group component. The group component was not counted if it would drop an individual’s mark.
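The weighting rule above can be expressed as a small function. This is a minimal sketch, assuming both scores are percentages (0 to 100) and reading "not counted if it would drop an individual’s mark" as: the student gets whichever is higher, the 75/25 blend or the individual score alone. The function name `quiz_mark` is hypothetical.

```python
def quiz_mark(individual: float, group: float) -> float:
    """Combine individual and group quiz scores (both percentages, 0-100).

    Assumed rule: 75% individual + 25% group, except that the group
    component is ignored whenever including it would lower the mark.
    """
    blended = 0.75 * individual + 0.25 * group
    # Taking the maximum drops the group part exactly when it would hurt.
    return max(individual, blended)


# Student scored 60% alone, group scored 90%: the group part helps.
print(quiz_mark(60.0, 90.0))  # 67.5
# Student scored 90% alone, group scored 60%: the group part is not counted.
print(quiz_mark(90.0, 60.0))  # 90.0
```

Using `max` keeps the "never hurts" guarantee in one line, so a strong individual performance can never be pulled down by a weaker group result.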

Immediate Feedback Assessment Technique (IF-AT)

The group quizzes were administered using IF-AT [1] cards, with correct answers being indicated by a star when the covering is scratched off. This immediate feedback meant that the students always knew the correct answer by the end of the quiz, which was a time at which they were at their most curious, and thus the correct answer should be most meaningful to them. Typically, students that did not understand why a given answer was correct would call me over and ask for an explanation.

“I feel finding out the answers immediately after the quiz helps clarify where I went wrong instead of letting me think my answer was right over the weekend. It also works the same for right answers.”

My students found it to be a very fair marking system because they received partial marks based on their number of incorrect answers. My system was 2 points if you got it correct on the first try, and that went down by half for each wrong answer. So 1 wrong answer = 1 point credit, 2 wrong answers = 0.5 point credit, and so on.
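The halving rule is simple enough to write down directly. A minimal sketch in Python, assuming a question's score depends only on how many wrong options were scratched before the star was revealed; the function name `ifat_points` is hypothetical.

```python
def ifat_points(wrong_scratches: int, full_credit: float = 2.0) -> float:
    """Points for one IF-AT question: full credit if the first scratch
    reveals the star, halved for each wrong option scratched before it."""
    if wrong_scratches < 0:
        raise ValueError("wrong_scratches cannot be negative")
    return full_credit / 2 ** wrong_scratches


# On a five-choice question a group can scratch at most 4 wrong options.
for wrong in range(5):
    print(wrong, ifat_points(wrong))

# A group quiz total is just the sum over questions, e.g. 0, 1, 0 and 2 wrong:
print(sum(ifat_points(w) for w in [0, 1, 0, 2]))  # 5.5
```

Even a group that needs every scratch on a five-choice question still earns 0.125 points, which is part of why the scheme feels fair to students.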

The General Benefits of Group Exams

Peer interactions and feedback: Both students benefit when one explains an answer to the other.

“When I think I know what’s going on I can explain it and realize yes, I really do know what I’m talking about…and sometimes vice-versa.”

All students are given a good chance to participate: All students, including the shy or quiet, participate and are given a chance to explain their understanding and reasoning. I gave nine of these two-stage quizzes over the term and don’t remember seeing any students sitting on the sidelines letting the rest of their group figure it out. They were invested because their marks were on the line, and they genuinely (based on feedback) seemed to feel like it was a good opportunity for learning.

Development of collaboration skills [2]: I can’t really comment on this too much. I wasn’t able to notice a difference in these skills over the course of the term, but I would certainly believe that many students developed them to some tangible degree.

Students enjoy them and there is an increase in overall enjoyment of the course [2]: I can’t comment on the claim from Ref. 2 about the increase in overall enjoyment, since I changed many things in this course from the first time I taught it and so couldn’t pinpoint any one thing that led to the higher student evaluations and more positive classroom culture (note that this was only my second time teaching this course). But I can certainly tell you that the feedback I got regarding the group quizzes was overwhelmingly positive.

“I (heart) group quizzes!!! I always learn, and it’s nice to argue out your thinking with the group. It really helps me understand. Plus, scratching the boxes is super fun.”

They promote higher-level thinking [3]: This claim from Ref. 3 is another one for which I neither looked for nor saw any evidence.

Increase in student retention of information (maybe) [4]: This is at the heart of a study which I plan to run this coming year. Ref. 4 saw a slight increase in student retention of information on questions for which students completed the group quizzes. But this study did not control for differences in time-on-task between the students that completed just the individual exams and those that completed both individual and group exams. More discussion on my planned study in a future post.

Improved student learning [3,5]: I saw the same sort of evidence for this that is shown in Ref. 5. Groups of students, where none of them had the correct answer for a question on their solo quizzes, were able to get the correct answer on the group quiz nearly half the time. This shift from nobody having the correct answer to the group figuring out the correct answer is a very nice sign of learning.
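The "nobody had it, but the group got it" figure can be computed from per-question records. This is a minimal sketch under stated assumptions: the record layout, the class `GroupQuestion` and the function `rescue_rate` are hypothetical, and treating "the group got the correct answer" as "the first IF-AT scratch revealed the star" is my assumption. The toy input is made up purely to show usage and is not data from the course.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class GroupQuestion:
    """One group's record for one quiz question (hypothetical layout)."""
    solo_correct: list[bool]  # each member's individual answer: correct or not
    group_first_try: bool     # True if the first IF-AT scratch revealed the star


def rescue_rate(records: list[GroupQuestion]) -> float | None:
    """Fraction of group-questions where no member was individually correct
    but the group still found the answer on its first scratch.

    Returns None when no group-question had zero correct members.
    """
    all_wrong = [r for r in records if not any(r.solo_correct)]
    if not all_wrong:
        return None
    rescued = sum(r.group_first_try for r in all_wrong)
    return rescued / len(all_wrong)


# Made-up toy input, for illustration only:
toy = [
    GroupQuestion([False, False, False], True),
    GroupQuestion([False, False, False], False),
    GroupQuestion([True, False, False], True),  # excluded: someone was right
]
print(rescue_rate(toy))  # 0.5
```

Restricting the denominator to cases where every member was wrong individually is what makes this a measure of learning during the group stage rather than of one member sharing an answer they already had.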

Some Specific Benefits I Saw in My Implementation

The feedback comes when it is most useful to them: Immediate feedback via IF-AT provides them with the correct answer when they are very receptive, after having spent time on their own and in group discussion pondering the question.

“It’s a good time to discuss and it’s the perfect time to learn, ‘cause right after the quiz, the ideas and thoughts stick to your mind. It’s gonna leave a great impression.”

Very high engagement from all students: My students were highly engaged with the questions at hand and were very excited (high-fives and other celebrations were delightfully common) when they got a challenging question correct.

Reduced student anxiety: due to (a) knowing that they could earn marks even if they were incorrect on the individual portion, and (b) knowing that they would come away from the quiz knowing the correct answers. Point (a) is pure speculation on my part. Point (b) was made by multiple students when I asked them to provide feedback on the group quiz process.

Some Drawbacks to Group Exams

Longer exam/quiz time: This really wasn’t a big deal. It was typically less than an extra 10 minutes and the advantages were far too great to not give up that extra little bit of class time.

Some students feel very anxious about group work and interactions: This never came up in the feedback I received from the students, but I have friends and family who have discussed with me how much they dislike group work. Perhaps this specific implementation might have even been to their liking.

Social loafers and non-contributors earn same marks as the rest of the group: To my mind the potential student frustration from this was greatly moderated by all students writing the solo quizzes, as well as the group portion being worth only 25% of total quiz mark. And as I mentioned earlier, I do not remember noticing a non-contributor even once over the term.

Dominant group members can lead the group astray when incorrect: This is another issue which, to my mind, the IF-AT sheets moderate greatly. Dominant group members can potentially minimize the contributions of other group members, but I do remember a reassuring point about dominant students made by Jim Sibley when I first learned of IF-AT. Jim Sibley is at the University of British Columbia and is a proponent of Team-Based Learning, a learning environment in which IF-AT cards are used for reading quizzes at the start of a module. He told us that groups often turn to the shy or quiet members as trusted sources of answers when dominant group members are repeatedly incorrect.

References

[1] http://www.epsteineducation.com

[2] Stearns, S. (1996). Collaborative Exams as Learning Tools. College Teaching, 44, 111–112.

[3] Yuretich, R., Khan, S. & Leckie, R. (2001). Active-learning methods to improve student performance and scientific interest in a large introductory oceanography course. Journal of Geoscience Education, 49, 111–119.

[4] Cortright, R.N., Collins, H.L., Rodenbaugh, D.W. & DiCarlo, S.T. (2003). Student retention of course content is improved by collaborative-group testing. Advances in Physiology Education, 27, 102–108.

[5] Gilley, B. & Harris, S. (2010). Group quizzes as a learning experience in an introductory lab. Poster presented at the Geological Society of America 2010 Annual Meeting.

Updates

March 26, 2011 – Added: “These quizzes would take place on the same day that the weekly homework was due (Mastering Physics, so they had the instant feedback there as well), with the homework being due the moment class started.”
