Workshop slides for Gilley & Ives “Two Stage Exams: Learning Together?”

Posted: May 4, 2017 Filed under: Group Quizzes

Today Brett Gilley and I ran a workshop, “Two Stage Exams: Learning Together?”, at the University of British Columbia Okanagan Learning Conference. Great fun was had and many ideas were exchanged. Slides below.

Gilley Ives – Two Stage Exam Workshop – UBCO Learning Conference – 2017-05-04

Learning Catalytics workflow

Posted: October 14, 2014 Filed under: Clickers, Learning Catalytics, Uncategorized

Disclosure: my colleague, Georg Rieger, and I are currently in the process of securing post-doc funding to evaluate the effectiveness of Learning Catalytics, and that position would be paid in part by Pearson, which owns Learning Catalytics.

A whole lotta devices!

I have been using Learning Catalytics, web-based “clickers on steroids” software, in a lecture course and a lab course since the start of September. In this post I want to focus on the logistical side of working with Learning Catalytics in comparison to clickers, and just touch briefly on the pedagogical benefits.

I will briefly summarize my overall pros and cons of using Learning Catalytics before diving into the logistical details:

- Pro: Learning Catalytics enables many types of questions that are not practical to implement with clickers. We have used word clouds, drawing questions (mostly FBDs and directions), numerical response, choose-all-that-apply, and ranking questions. Aside from the word clouds, all of these question types are possible as multiple-choice questions if you have, or can come up with, good distractors. Learning Catalytics, however, lets you collect students' actual answers instead of having them give you their best guess from a set of answers that you provide.

- Con: Learning Catalytics is clunky. The bulk of this post discusses these issues. In brief, Learning Catalytics has greater hardware requirements, relies heavily on good wifi and website performance, is more fiddly to run as an instructor, and is less time-efficient than just using clickers (in the same way that using clickers is less time-efficient than using coloured cards).

- Pro: The Learning Catalytics group tool engages reluctant participants in a way that no amount of buy-in or running around the classroom trying to get students to talk to each other seems able to do. During the group revote portion of Peer Instruction (the “turn to your neighbor” part), Learning Catalytics tells each student exactly who to talk to (e.g., “talk to Jane Doe, sitting to your right”) and matches them up with somebody who answered differently. In principle this is no different from instructing them to “find somebody nearby that answered differently than you did and convince them that you are correct.” In practice, though, there is a huge difference in how quickly they start these discussions and in what fraction of the room engages in them.
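The pairing idea in the last point can be sketched in a few lines. This is a minimal, hypothetical illustration and not how Learning Catalytics actually does it. The function name `pair_by_different_answers` and the rule of drawing from the two largest answer groups are my assumptions. The sketch also ignores seating, which the real group tool clearly takes into account.

```python
from collections import defaultdict


def pair_by_different_answers(responses):
    """Pair students who gave different answers to the same question.

    responses: dict mapping a student's name to the answer they chose.
    Returns (pairs, unpaired). Seating is ignored in this sketch.
    """
    # Group students by the answer they gave.
    by_answer = defaultdict(list)
    for student, answer in responses.items():
        by_answer[answer].append(student)

    pairs = []
    while True:
        # Draw one student from each of the two largest remaining answer
        # groups, which tends to leave as few students unpaired as possible.
        groups = sorted((g for g in by_answer.values() if g), key=len, reverse=True)
        if len(groups) < 2:
            break
        pairs.append((groups[0].pop(), groups[1].pop()))

    # Anyone left over shares an answer with everyone else still waiting.
    unpaired = [s for g in by_answer.values() for s in g]
    return pairs, unpaired
```

For example, `pair_by_different_answers({"Ana": "A", "Ben": "A", "Chen": "B", "Dev": "C"})` pairs all four students so that each pair disagrees. Leftover students who all chose the same answer would need some other rule, such as joining an existing pair.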

Honestly, the first two points make it so that I would favour clickers a bit, but the difference in the level of engagement thanks to the group tool is the thing that has sold me on Learning Catalytics. Onto the logistical details.

Hardware setup

As you can see from the picture, I use a lot of devices when I teach with Learning Catalytics. You can get away with fewer devices, but this is the solution that meets my needs. I have tried some different iterations, and what I describe here is the one that I have settled on.

- In the middle you will find my laptop, which runs my main slide deck and is permanently projected on one of the two screens in the room. It has a Bamboo writing tablet attached for marking up slides live, and it will likely be replaced by a Surface Pro 3 in the very near future.

- At the bottom is my tablet (iPad), which I use to run the instructor version of Learning Catalytics. This is where I start and stop polls, choose when and how to display results to the students, and carry out other such instructorly tasks. The screen is never shared with the students and is analogous to the instructor remote and the little receiver-and-display box that I use with iClickers. Since it accesses Learning Catalytics over wifi and is not projected anywhere, I can wander around the room with it in my hand and monitor the polls while talking to students. Very handy! I have also tried to do this from my smartphone when my tablet battery was dead, but the instructor UI is nowhere near as good on a smartphone as it is on larger tablets or in regular web browsers.

- At the top is a built-in PC, which I use to run the student version of Learning Catalytics. This displays the Learning Catalytics content that students are seeing on their devices at any moment. I want to have this projected for two reasons.

  First, I like to stomp around and point at things when I am teaching. I want the question currently being discussed, or the result currently being displayed, to be something that I can point at and focus their attention on instead of it just being on the screens of their devices.

  Second, I need to see what the students see at any moment to make sure that the question or result I intended to push to their devices has actually been pushed. For this second point, it is reasonable to flip back and forth between the instructor and student views on the device running Learning Catalytics (this is what one of my colleagues does successfully). I find that a bit clunky, though, and it still doesn’t meet my need to stomp around and point at stuff.

  The instructor version of Learning Catalytics pops up a student view, and this is what I use here (so technically I am logged in as an instructor on two devices at once). This pop-up student view makes better use of the projected screen real estate (e.g., results are shown along the side instead of at the bottom) than the student version that one gets when logging in with a student account.

Other logistics

The trade-off when going from clickers to Learning Catalytics is that you gain a bunch of additional functionality, but in order to do so you need to take on a somewhat clunky and less time-efficient system. There are additional issues that may not be obvious from just the hardware setup described above.

- I am using three computer-type devices instead of a computer and a clicker base. Launching Learning Catalytics on a device takes only a bit longer than plugging in my iClicker base and starting the session. Still, this is one or two more devices to get going (again, my choice and preference, since I want the student view). We typically have very little time between gaining access to a room and the start of class, so each extra step in this process introduces another possible delay in starting on time. With 10 minutes, I find I am often cutting it very close and am sometimes not quite ready on time.

  In two of the approximately twelve lectures where I intended to use Learning Catalytics this term, there was a wifi or Learning Catalytics website problem. Once, I just switched to clickers (the students have them for their next course). The other time, the problem resolved quickly enough that it only cost us a bit of time. When I remember to do so, I can save myself some time by starting the session on my tablet before I leave my office.

- The workflow of running a Learning Catalytics question is very similar to running a clicker question. Even so, after six weeks of using Learning Catalytics, clickers feel like they have a decent-sized advantage in the “it just works” category. There are many more choices in the Learning Catalytics software, and with that comes a loss of simplicity. Having had to use clickers instead of Learning Catalytics a few weeks ago, I can say that the experience reinforced the “it just works” aspect of the clickers.

- Overall, running a typical Learning Catalytics question feels less time-efficient than running a clicker question. It takes slightly longer to start the question, for students to answer, and then to display the results. This is amplified slightly because many of the questions we are using require students to interact with the question in more complicated ways than just picking one of five answers.

  All that being said, my lecture TA and I noted last week that we finally seem to have reached the point where a multiple-choice question in Learning Catalytics takes about as long from beginning to end as it does with clickers. To get to this point, I have had to push the pace quite a bit with these questions, starting my “closing the poll” countdown when barely more than half of the answers are in. So I think I can now run multiple-choice questions with similar efficiency on both systems, but I have to actively force the timing with Learning Catalytics. However, having to force the timing may be more a characteristic of the students in the course than of the platform.

- Batteries! Use of Learning Catalytics demands that everybody has a sufficiently charged device or ability to plug their device in, including the instructor. This seems a bit problematic if students are taking multiple courses using the system in rooms where charging is not convenient.

- Preparing for class also has additional overhead. We have been preparing the lecture slides in the same way as usual and then porting any questions we are using from the slides into Learning Catalytics. This process is fairly quick, but it still adds time to course preparation. Where it can become a bit annoying is that sometimes the slide and Learning Catalytics versions of a question aren’t identical. This happens when a typo fix or other modification was made on one platform but accidentally not on the other. There haven’t been a ton of these, but it is one more piece that makes using Learning Catalytics a bit clunky.

- In its current incarnation, it seems like one could use Learning Catalytics to deliver all the slides for a course, not just the questions. This would be non-ideal for me because I like to ink up my slides while I am teaching, but this would allow one to get rid of the need for a device that was projecting the normal slide deck.

In closing

An instructor needs to be willing to take on a lot of overhead, inside the class and out, if they want to use Learning Catalytics. For courses where many of the students are reluctant to engage enthusiastically with the peer discussion part of the Peer Instruction cycle, the group tool functionality can make a large improvement in that level of engagement. The additional question types are nice to have, but feel like they are not the make or break feature of the system.

I finally got to meet my students from the international college

Posted: August 22, 2014 Filed under: Uncategorized | Tags: Vantage College

Last week was a historic time for us at Vantage College (the international first-year transfer college that gets 2/3 to 3/4 of my time, depending on how you count it or perhaps who you ask). Our very first students evar arrived. For the past week, they have been participating in a 1500ish-student orientation program for international and aboriginal students on campus. I have been the faculty fellow for a group of 20ish students. In addition to our scheduled activities, I have been going out of my way to join them for lunch (which sometimes involves taking selfies with the students).

As a quick reminder, Vantage is a one-year residential college at UBC for international students whose incoming English scores were a bit too low for direct entry. I am teaching our enriched physics course to these students. All of the courses in the college have language support in addition to the regular course support. Those who are successful in the program will be able to transfer into the second year of various programs and potentially complete their degrees in four years. Although I am regular right-before-the-term-starts busy, I want to quickly reflect on things…

- Although it wasn’t obvious to me at first, one of the reasons I am really excited about this is that it is a cohort-based program, where our science courses max out at 75 seats. I am really looking forward to building relationships with the students over this time and then hopefully getting to see them continue on to be amazingly successful UBC students.

- Our teaching team and support staff might just be the most fantastically talented group of people on campus from a per-capita-awesomeness standpoint. And we (the physics team) have been poaching some of the best TAs in our department to work with us. Our leadership team is supportive, just as excited as the rest of us and seem to manage that magical combination of having both the students’ and instructors’ best interests in mind.

- The average level of conversational English of the students I have met so far has been much higher than I was expecting. The conversations may be slow and involve some repetition, but I have been able to have lots of genuine conversations.

- The students are excited! We’re excited!

- It has been really fun discovering how many cultural references and touchstones I take for granted. I was with a group of students when a Star Wars reference (our computer science department has a wing called “x-wing”) came up. Not that I was expecting them to get the reference, but I said something to the effect of “haha, x-wing, that’s a thing from Star Wars” and then realized that most of them didn’t even know what Star Wars was.

- Designing a program from scratch has been a great experience. In the end, our Physics courses are frighteningly similar to what my small first-year courses looked like at UFV, but we got there through a lot of discussions, weighing options, etc.

So meeting the students has turned all of this abstract planning quite real and what used to be the future into the present. I am so delighted to have met so many of the students with whom I’m going to spend the next 10 months.

AAPTSM14/PERC2014: Measuring the Learning from Two-Stage Collaborative Group Exams

Posted: August 3, 2014 Filed under: Group Quizzes

In an attempt to get back into semi-regular blogging, I am setting aside my pile of half-written posts to share the work that I presented at the 2014 AAPT Summer Meeting and PERC 2014.

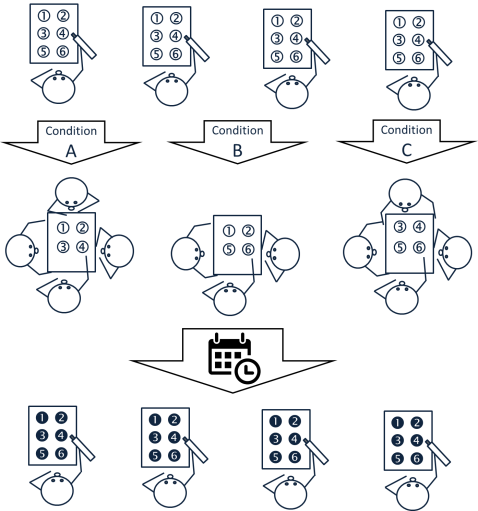

The quick and dirty version is that I ran a study of the effectiveness of group exams in a three-section course that enrolled nearly 800 students. The image below summarizes the study design, which was repeated for each of the two midterms.

(First row) The students all take/sit/write their exams individually. (Second row) After all the individual exams have been collected, the students self-organize into collaborative groups of 3-4. There were three different versions of the group exam (conditions A, B and C), each with a different subset of the questions from the individual exam. The design ensured that, for each question (1-6), 1/3 of the groups did not see that question on their group exam (control) and the other 2/3 did (treatment). (Bottom row) The students were retested on the end-of-term diagnostic using questions that matched the original 6 midterm questions in terms of the application of concepts.
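The counterbalancing described above can be made concrete with a short sketch. The post does not say which two questions each version left out, so the `OMITTED` mapping below is a hypothetical split, and the helper names are illustrative. The only property that matters is that each question is dropped from exactly one of the three versions. That way, any given question has about 1/3 of the groups as controls and 2/3 as treatment.

```python
from collections import Counter

QUESTIONS = range(1, 7)
# Hypothetical split: which two questions each version drops is an assumption.
OMITTED = {"A": {1, 2}, "B": {3, 4}, "C": {5, 6}}


def group_exam_questions(version):
    """Questions that appear on a given version of the group exam."""
    return [q for q in QUESTIONS if q not in OMITTED[version]]


def condition(version, question):
    """A group is a control for any question its version leaves out."""
    return "control" if question in OMITTED[version] else "treatment"


def assign_versions(group_ids):
    """Rotate through A, B, C so each version goes to about 1/3 of the groups."""
    versions = sorted(OMITTED)
    return {g: versions[i % len(versions)] for i, g in enumerate(group_ids)}


assignment = assign_versions(range(9))
for q in QUESTIONS:
    counts = Counter(condition(v, q) for v in assignment.values())
    print(q, counts["control"], counts["treatment"])
```

With nine groups, the loop prints one line per question, and each line shows 3 control groups and 6 treatment groups.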

Results: As previously mentioned, I went through this cycle for each of the two midterms. Midterm 2 took place only 1-2 weeks before the end-of-term diagnostic. For it, students who saw a given question on their group exam outperformed those who did not on the matched diagnostic questions. Huzzah!

Midterm 1, however, took place 6-7 weeks before the diagnostic. For the diagnostic questions matched to midterm 1, there were no statistically significant differences between students who saw the matched questions on their group exams and those who did not. The most likely explanation is that the learning from both control and treatment decays over time, and so does the difference between these groups: after 1-2 weeks there is still a statistically significant difference, but after 6-7 weeks there is not. The result could also reflect differences between the questions associated with midterm 1 and those associated with midterm 2. For some of the questions, the concepts may not have been separated well enough between questions. In that case, group exam discussions may have helped students learn the concepts behind questions that weren’t on their particular group exam. I hope to address these possibilities in a study this upcoming academic year.
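The post does not say which test was behind “statistically significant”, so what follows is only one standard option, sketched under that caveat. It is a pooled two-proportion z-test comparing the fraction of treatment and control students who answer a matched diagnostic question correctly. The function name is hypothetical, and the counts in the usage line are made up for illustration.

```python
import math


def two_proportion_z_test(correct_t, n_t, correct_c, n_c):
    """Compare the fraction correct in a treatment group and a control group.

    Returns (z, two-sided p-value) for the pooled-proportion z-test.
    """
    p_t = correct_t / n_t
    p_c = correct_c / n_c
    # Pool both groups to estimate the common proportion under the null hypothesis.
    pooled = (correct_t + correct_c) / (n_t + n_c)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_t + 1 / n_c))
    z = (p_t - p_c) / se
    # Two-sided p-value from the standard normal: P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, `two_proportion_z_test(60, 100, 50, 100)` gives z ≈ 1.42 and p ≈ 0.155, which is not significant at the usual 0.05 level. Students who sat the same group exam are not independent of each other, so a more careful analysis would probably need to account for that clustering.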

Will I abandon this pedagogy? Nope. The group exams may not provide a measurable learning effect which lasts all the way to the end of the term for early topics, but I am more than fine with that. There is a short-term learning effect and the affective benefits of the group exams are extremely important:

- One of the big ones is that these group exams are effectively Peer Instruction in an exam situation. Since we use Peer Instruction in this course, this means that the assessment and the lecture generate buy-in for each other.

- Previous studies have shown a number of affective benefits, such as increased motivation to study, increased enjoyment of the class, and lower failure rates (see the arXiv link to my PERC paper for more on this). My study design had students encountering different subsets of the original questions on their group exams. From the perspective of the affective benefits, however, all students participated in the same intervention.

I had some great conversations with old friends, new friends and colleagues. I hope to expand on some of the above based on these conversations and feedback from the referees on the PERC paper, but that will be for another post.

Pre-class homework completion rates in my first-year courses

Posted: November 18, 2013 Filed under: Flipped Classroom

In my mind, it is hard to get students to do pre-class homework (“pre-reading”) with much more than an 80% completion rate when averaged over the term. It usually starts higher than this, but completion slowly trends downward as the term wears on. I took a more careful look at the five introductory courses in which I used pre-class assignments. I discovered that I was able to do much better than 80% in some of the later courses, and I want to share my data.

Descriptions of the five courses

The table below summarizes some of the key differences between the five introductory physics courses in which I used pre-class assignments. It may also be important to note that the majority of the students in Jan 2010 were the same students from Sep 2009. In contrast, not much more than half of the Jan 2012 students took my Sep 2011 course. For Jan 2013, only two of the students had previously taken a course with me.

| Course | Textbook | Contribution to overall course grade | Median completion rate (1st quartile, 3rd quartile) |
|---|---|---|---|
| Sep 2009 (Mechanics) | Young & Freedman – University Physics 11e | Worth 8%, but drop 3 worst assignments. No opportunities for late submission or earning back lost marks. | 0.73 (0.62, 0.79) |
| Jan 2010 (E&M) | Young & Freedman – University Physics 11e | Worth 10%, but drop 2 worst assignments. No opportunities for late submission or earning back lost marks. | 0.78 (0.74, 0.89) |
| Sep 2011 (Mechanics) | smartPhysics | Worth 8%. Did not drop any assignments, but they could (re)submit at any point up until the final exam and earn half marks. | 0.98 (0.96, 0.98) |
| Jan 2012 (E&M) | smartPhysics | Worth 8%. Did not drop any assignments, but they could (re)submit at any point up until the final exam and earn half marks. | 0.94 (0.93, 0.98) |
| Jan 2013 (E&M) | Halliday, Resnick & Walker – Fundamentals of Physics 9e & smartPhysics multimedia presentations | Worth 10%. Did not drop any assignments, but they could (re)submit at any point up until the final exam and earn half marks. | 0.93 (0.87, 0.97) |
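For readers who want to compute the same kind of summary for their own courses, here is a minimal sketch. It assumes the completion rate in the table is taken per assignment, i.e., the fraction of the class completing each assignment, as in Figure 1. The table does not say this explicitly. The function name and the 0/1 input format are hypothetical. Using `axis=1` instead of `axis=0` would give per-student rates, as in Figure 2.

```python
import numpy as np


def completion_summary(completed):
    """Median and 1st/3rd quartiles of per-assignment completion rates.

    completed: 2-D array-like of 0/1 values, one row per student and one
    column per pre-class assignment (1 = completed).
    """
    completed = np.asarray(completed, dtype=float)
    # Fraction of the class that completed each assignment (column means).
    per_assignment = completed.mean(axis=0)
    q1, median, q3 = np.percentile(per_assignment, [25, 50, 75])
    return median, (q1, q3)
```

For instance, four students and four assignments completed by 4, 3, 2 and 1 students give per-assignment rates of 1.0, 0.75, 0.5 and 0.25. The summary is then 0.625 (0.4375, 0.8125), using NumPy's default linear interpolation between data points.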

Overall, the style of question used was the same for each course, with the most common type of question being a fairly straightforward clicker question (I discuss the resources and assignments a bit more in the next paragraph). I have not crunched the numbers, but scanning through results from the Jan 2013 course shows that students answered the questions correctly somewhere in the 65-90% range. The questions used in that course were a mishmash of those from the Jan 2010 and Jan 2012 courses.

Every question had an “explain your answer” part. These assignments were graded on completion only, but the explanation had to show a reasonable level of effort to earn the completion marks. Depending on class size, I did not always read their explanations in detail, but I always scanned every answer. For the first couple of assignments, I always made sure to send some feedback to each student, including an explanation of the correct answer if they answered incorrectly. Each question was also discussed in class.

A rundown of how the resources and assignments varied by class:

- For Sept 2009 and Jan 2010 I used a Blackboard assignment to give them the three questions each week and told them which sections of the textbook to read, and I didn’t do much to tell them to skip passages or examples that weren’t directly relevant.

- For Sept 2011 and Jan 2012, I used smartPhysics (developed by the UIUC PER group). These consist of multimedia presentations for each chapter/major topic, with embedded conceptual questions (no student explanations required for these). After they finish the multimedia presentation, students answer the actual pre-class questions, which are different from those embedded in the presentation. For the most part, the questions in their pre-class assignments were similar to the ones I was previously using, except that the smartPhysics ones were often more difficult. Additionally, my one major criticism of smartPhysics is that I don’t feel the presentations are pitched at the appropriate level for a student encountering the material for the first time. For more on this, have a look at the second bullet in the “Random Notes” section of an earlier post I did on pre-class assignments. One of the very nice things about smartPhysics is that everything (the regular homework, the pre-class assignments and the multimedia presentations) used the same web system.

- For January 2013, I was back to assigning the pre-class assignments through Blackboard. The preamble for each pre-class assignment pointed students toward a smartPhysics multimedia presentation and the relevant sections of the textbook we were using. Students could use one, the other, or both of these resources as they saw fit. I don’t think I ever surveyed them on their use of one over the other, but anecdotally I had the sense that way more were using the multimedia presentations.

The data

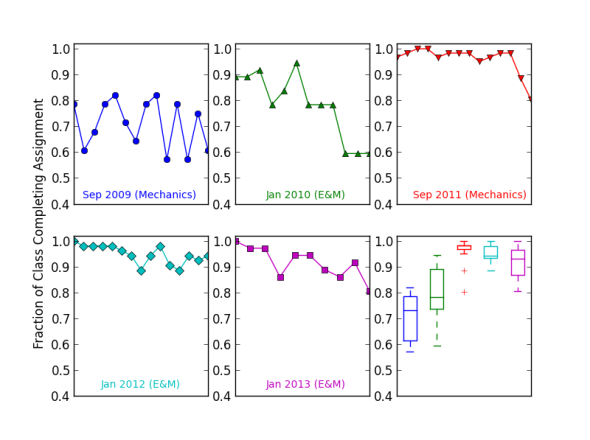

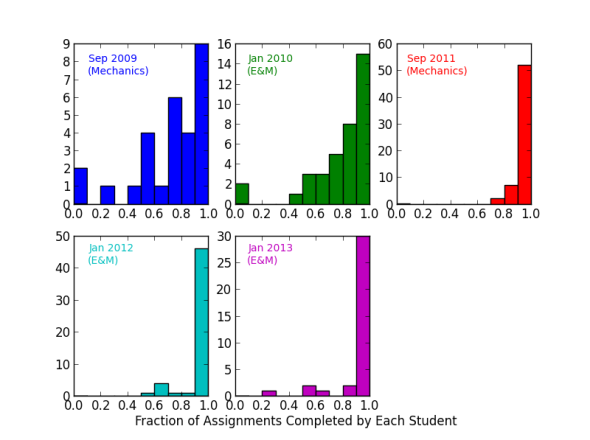

I present two graphs showing the same data from different perspectives. Figure 1 shows how the fraction of the class completing a given pre-class assignment varies over the course of the term. There is a noticeable downward trend in each course. Figure 2 shows the fraction of assignments completed by each student in each class.

Figure 1. In the first five graphs, the x-axis represents time, going from the start of the course (left side) to the end of the course (right side). The box-and-whiskers plot compares the five courses using the same colours as before. The line in each box shows the median, and the box edges show the 1st and 3rd quartiles. The whiskers are the matplotlib default: they extend to the most extreme data point within 1.5 × (Q3 − Q1) of the box.

Figure 2. Histograms showing the fraction of assignments completed by each student. An ambiguous-looking artifact appears in the 0 bin for Sept 2011, but all students in that course completed 70% or more of the pre-class assignments.
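The whisker rule from the Figure 1 caption can be reproduced directly. The sketch below assumes NumPy's default linear interpolation for the quartiles. matplotlib's `boxplot` uses `whis=1.5` by default, but its internal computation may differ from this sketch in small details. The function name `whisker_ends` is hypothetical.

```python
import numpy as np


def whisker_ends(data, whis=1.5):
    """Whisker ends and outliers for a box plot, using the whis * IQR rule.

    The whiskers reach the most extreme data points that still lie within
    whis * (Q3 - Q1) of the box; anything beyond that is returned as an outlier.
    """
    data = np.asarray(data, dtype=float)
    q1, q3 = np.percentile(data, [25, 75])
    iqr = q3 - q1
    low_limit = q1 - whis * iqr
    high_limit = q3 + whis * iqr
    inside = data[(data >= low_limit) & (data <= high_limit)]
    outliers = data[(data < low_limit) | (data > high_limit)]
    return inside.min(), inside.max(), outliers
```

For a made-up set of per-assignment completion rates like `[0.1, 0.8, 0.85, 0.9, 0.95, 1.0]`, the whiskers run from 0.8 to 1.0. The single 0.1 falls outside the limits and would be drawn as an outlier point.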

Discussion

There is clearly a large difference between the first two courses and the final three in terms of the rates at which students were completing these pre-class assignments. The fact that I saw 98% of these assignments completed one term is still shocking to me. I’m not sure how much each of the following factors contributed to the changes, but here are some of the potential factors…

- Multimedia presentations – students seem to find these easier to consume than reading the textbook. Homeyra Sadaghiani at California State Polytechnic University ran a controlled study [Phys. Rev. ST Physics Ed. Research 8, 010103 (2012)] comparing the multimedia presentations to textbook readings, using the same pre-class assignments for both. She found that the multimedia presentation group did slightly better on the exams. She also found that students had a favorable attitude toward the usefulness of the multimedia presentations, whereas the textbook group had an unfavorable attitude toward the textbook reading assignments. But she also mentions that the multimedia group had a more favorable attitude toward clicker questions than the textbook section did. This alone could explain the difference in test performance, independent of any learning that takes place as part of the pre-class assignments. If the students in one section buy into how the course is run more than those in another, they are going to do a better job of engaging with all of the learning opportunities and as a result should learn more. There are a variety of reasons why reading the textbook may be preferable to watching a video or multimedia presentation, but you can’t argue with the participation results.

- Generating buy-in – I have certainly found that, as time wears on, I have gotten better at generating buy-in for the way that my courses are run. I have gotten better at following up on the pre-class assignments in class and weaving the trends from their submissions into the class. However, in the Sep 2009 and Jan 2010 courses, I sent students more personal feedback on their pre-class assignments than in any intro course since. So I might have expected my improved buy-in and the decrease in personal feedback to roughly cancel each other out.

- Changes in grading system – This may be a very large one and is tied to generating buy-in. For the first two courses, I allowed students to drop their worst 3 or 2 pre-class assignments, respectively, from their overall grade. In the later courses, I changed to a system where they could submit the assignments late for half credit, but were not allowed to drop any. With the latter method, I am clearly communicating to the students that I think it is worth their time to complete all of the assignments.

Having poked around through the UIUC papers and those from Sadaghiani, I can say that the 98% completion rate from my Sept 2011 course is really high. It will, however, overestimate how many people actually engaged with the pre-class material as opposed to trying to bluff their way through it. The smartPhysics system also gave students credit for completing the questions embedded in the multimedia presentations. I’m not presenting those numbers here, but when I scan the gradebooks, those who received credit for their pre-class assignments also always received credit for the embedded questions. Students can skip slides to get to those questions, though, so that doesn’t mean they actually consumed the full presentations. Based on reviewing their explanations each week (with different degrees of thoroughness) and docking grades accordingly, I would estimate that maybe 1 or 2 students managed to bluff their way through each week without actually consuming the presentation. That translates to 2-3% of these pre-class assignments.

Sadaghiani reported “78% of the MLM students completed 75% or more of the MLMs”, where MLM is what I have been calling the multimedia presentations. Colleagues of mine at UBC found (link to poster) that students self-reported reading their textbooks regularly in a course that used a quiz-based pre-class assignment. In that course, students were given marks for being correct rather than just for participating, and they were not asked to explain their reasoning. 97% of the students actually took the weekly quizzes, but there is a discrepancy between the number who took the quizzes and the number who actually did the preparation.

With everything I have discussed here in mind, it seems that 80% or better is a good rule of thumb number for buy-in for pre-class activities, and that one can do even better than that with some additional effort.

Student collision mini-projects from my summer 2013 comp-phys course

Posted: October 3, 2013 Filed under: Computational Physics

The last course that I taught at UFV before taking a job at UBC was an online Computational Physics course. I previously posted the mini-project videos from when I ran the course in the fall; check that post for more of the context behind these mini-projects. The overall level of creativity seems to have been a bit lower this time than last. That might be due in part to the course being online this time, whereas it was in person the last time it ran. Last time, people would show up to the computer lab, see what others were working on, and the stakes would be raised. If I ran this type of course online again, I would have people submit regular progress videos. That would create a virtual equivalent of people showing up in the lab and feeling the stakes being raised.

Mathematica vs. Python in my Computational Physics course

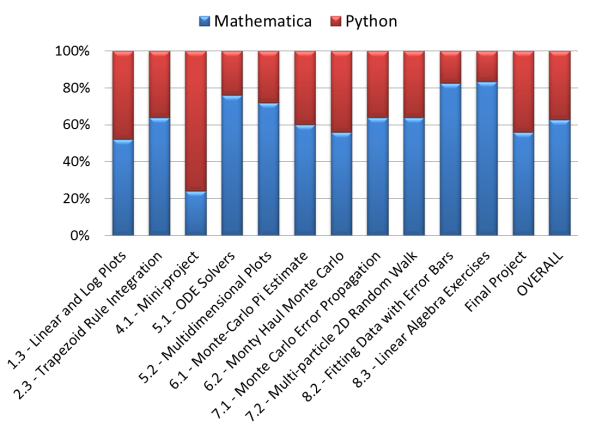

Posted: September 26, 2013 Filed under: Computational Physics

This past year I taught two sections (fall and summer) of Computational Physics at UFV. That is quite a rare thing at a school where we typically run 3rd- and 4th-year courses every other year. The course was structured so that students would get some initial exposure to both Python and Mathematica for physics-related computational tasks. As the course went on, they were given more and more opportunities to choose between the two platforms when completing a task. This post looks at the choices made by the students on a per-task basis and a per-student basis. From a total of 297 choices, they chose Mathematica 62.6 ± 2.8% (standard error) of the time.

Two things to note. First, there is a small additional bias toward Mathematica, because two of the tasks are more Mathematica-friendly and only one is more Python-friendly, as will be discussed. Second, some students came into the course with previous Mathematica experience, and others came in with a strong computing background in Java or C++. Those students usually gravitated toward Mathematica or Python, respectively. But some students did the opposite: they took the opportunity to become much more familiar with a new-to-them platform and tried to do as much of their work as possible on it.
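The quoted uncertainty is consistent with the usual binomial standard error of a proportion, sqrt(p(1 − p)/n). The post does not state the formula, so treat this as a reconstruction. 62.6% of 297 choices corresponds to about 186 Mathematica choices, and plugging that count in reproduces the ±2.8%.

```python
import math

n_choices = 297
# Reconstructed count: 62.6% of 297 is about 186 (not a number reported in the post).
n_mathematica = round(0.626 * n_choices)

p = n_mathematica / n_choices
# Binomial standard error of a proportion: sqrt(p * (1 - p) / n)
se = math.sqrt(p * (1 - p) / n_choices)
print(f"{100 * p:.1f} ± {100 * se:.1f}%")  # prints "62.6 ± 2.8%"
```

This formula treats the 297 choices as independent. Each student made many of them, so the true uncertainty is probably somewhat larger.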

Task by task comparison

The general format of the course is that, for the first three weeks, the students are given very similar tasks that they must complete using Python and Mathematica. Examples include importing data and manipulating the resulting arrays/lists to do some plotting, and basic iterative (for/do) loops. Each of those weeks there is a third task (1.3 and 2.3 in Figure 1), where they build on those initial tasks and can choose to use either Python or Mathematica. In subsequent weeks, being forced to use both platforms goes away and they can choose which platform to use.

Figure 1 shows their choices for the tasks in which they could choose. In week 3 they learn how to do animations using the Euler-Cromer method, in both Mathematica and VPython. Although Mathematica works wonders with animations when using NDSolve, it is really clunky for Euler-Cromer-based animations. Task 4.1 is a mini-project in which they animate a collision. Since at that point they have only learned how to do this type of thing using Euler-Cromer, VPython is the much friendlier platform for the mini-project. But as you can see in the third column of Figure 1, some students were already quite set on using Mathematica. Conversely, for tasks 8.2 and 8.3, Mathematica is the much more pleasant platform, and you can see that only a few diehards stuck with Python for those tasks.

Figure 1. Comparison between Mathematica and Python usage by task. Each column represents approximately 25 submissions, typically from 23 individual students and 2 student groups. There is some variation in the total counts from column to column due to non-submissions. Final projects were done individually, but within each of the two groups, members made the same choice of platform for their final projects.

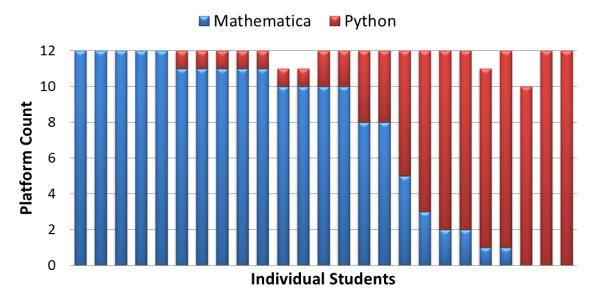
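The post doesn't show the students' code, so what follows is a minimal sketch of an Euler-Cromer update for a one-dimensional collision of two carts. It is written in plain Python rather than VPython to keep it self-contained. The contact model (a stiff spring that acts only while the carts overlap), the function name `collide_euler_cromer` and all parameter values are assumptions for illustration, not the course's mini-project. What makes it Euler-Cromer is the ordering: velocities are updated first from the forces at the current positions, and positions are then advanced using the new velocities.

```python
def collide_euler_cromer(m1=1.0, m2=1.0, x1=0.0, x2=2.0, v1=1.0, v2=0.0,
                         contact=1.0, k=1000.0, dt=1e-4, t_end=2.0):
    """Hypothetical 1D two-cart collision stepped with the Euler-Cromer method.

    Assumed contact model: while the separation x2 - x1 is less than
    `contact`, the carts push apart with a spring force k * overlap.
    Returns the final velocities (v1, v2).
    """
    steps = int(round(t_end / dt))
    for _ in range(steps):
        overlap = contact - (x2 - x1)
        # Force on cart 2 (positive x direction); cart 1 feels -force.
        force = k * overlap if overlap > 0 else 0.0
        # Euler-Cromer step 1: update velocities from the current forces...
        v1 += -force / m1 * dt
        v2 += force / m2 * dt
        # ...step 2: advance positions using the *new* velocities.
        x1 += v1 * dt
        x2 += v2 * dt
    return v1, v2

v1, v2 = collide_euler_cromer()
print(f"after collision: v1 = {v1:.3f} m/s, v2 = {v2:.3f} m/s")
```

For equal masses, the carts should approximately exchange velocities. Because the contact forces are equal and opposite, total momentum is conserved up to rounding error. In a VPython version, the same loop would also update the positions of the displayed objects each step, typically with a `rate()` call to control the animation speed.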

Student by student comparison

Figure 2 shows the breakdown of these choices by student or group. The seven students who chose Python only once all used it for the mini-project, and the two students who chose Mathematica only once used it for the Linear Algebra exercises.

Figure 2. Comparison of Mathematica and Python usage on a student-by-student (or group-by-group) basis, ordered from maximum Mathematica usage to maximum Python usage. Variation in the total counts from column to column is due to non-submissions. Final projects were done individually, but within each of the two groups, members made the same choice of platform for their final projects.

Student feedback

After the course was completed, I asked the students for feedback on various aspects of the course, and one of the questions I asked was:

How did you find that the mix of Mathematica and Python worked in this course?

Approximately half of the students submitted feedback, and their responses were as follows:

- I would have preferred more emphasis on Mathematica: 1 (14%)
- I would have preferred more emphasis on Python: 0 (0%)
- The mix worked well: 6 (86%)

Their comments:

- Kinda hard to choose one of the above choices. I felt like I wish I could’ve done more stuff in python but I was happy with how proficient I became at using Mathematica. So I think the mix worked well, though I kinda wish I explored python a bit more.

- I think it was very valuable to see the strengths and weaknesses of both Mathematica and Python as well as to get familiar with both of them.

- It was cool to see things done in two languages. I’m sure that people less enthusiastic about programming would probably have preferred one language, but I thought it was good.

- Doing both to start followed by allowing us to pick a preferred system was great.

In closing

I ended up being quite happy with how the mix worked. I learned a ton in the process because I came in being only mildly proficient with each platform. I was also really happy to have the students come out of the course seeing Python as a viable alternative to Mathematica for a wide range of scientific computing tasks, and conversely getting to see some places where Mathematica really excels.

75 vs. 150

Posted: September 16, 2013 Filed under: Vantage college

As previously mentioned, a significant component of my new job at UBC this year is curriculum design for a first-year cohort that will be taking, among other things, Physics, Calculus and Chemistry. English language instruction will be embedded in some way into the courses or the support pieces around them. These students are mostly prospective Math, Physics and Chemistry majors. An interesting discussion we are having right now relates to class size: specifically, sections of 75 versus 150 students.

To me, there are two main possible models for the physics courses in this program:

- Run them like our other first-year lecture-hall courses, where clickers and worksheets make up a decent portion of class time. In this case, 75 vs. 150 is mostly the difference in how personal the experience is for the students. Based on my own experience, I feel that 75 students is the maximum number for which an instructor who puts in the effort can learn all the faces and names. With TAs embedded in the lecture, the personal attention students get could be similar at the two sizes, but there is still the difference of the prof specifically knowing who you are.

- Rethink the space completely and have a studio or SCALE-UP style of classroom, where students sit around tables in larger groups (9 is common) and can subdivide into smaller groups when the task requires it. This would make 75 the only practical choice of the two sizes. This type of setup facilitates moving back and forth between “lecture” and lab, but it is not uncommon for “lecture” and lab to still have their own scheduled times, as is the case in most introductory physics courses.

Going with 75 students will require additional resources or a reallocation of existing program resources, so the benefits of the smaller size need to clearly outweigh this additional cost. My knee-jerk reaction was that 75 is clearly better because smaller class sizes are better (this seems to be the mantra at smaller institutions). But I got over that quickly, and I am now trying to determine specifically what additional benefits can be offered to the students if the class size is 75 instead of 150. I am also trying to figure out what additional benefits we could bring to the students if we took the resources needed to support class sizes of 75 and moved them somewhere else within the cohort.

How would you argue between these two numbers? If you have taught classes of similar sizes, can you offer your perspective? I realize there are many possible factors, but I would like to hear which ones seem most important to you.

Help me figure out which article to write

Posted: September 9, 2013 Filed under: Uncategorized

I have had four paper proposals accepted by the journal Physics in Canada, the official journal of the Canadian Association of Physicists. I will only be submitting one paper and would love to hear some opinions on which one to write and submit. Below I briefly summarize what they are looking for according to the call for papers, and then summarize my own proposals.

Note: My understanding is that the tone of these articles would be similar to that of articles appearing in The Physics Teacher.

Call for Papers

Call for papers in special issue of Physics in Canada on Physics Educational Research (PER) or on teaching practices:

- Active learning and interactive teaching (practicals, labatorials, studio teaching, interactive large classes, etc.)

- Teaching with technology (clickers, online homework, whiteboards, video analysis, etc.)

- Innovative curricula (in particular, in advanced physics courses)

- Physics for non-physics majors (life sciences, engineers, physics for non-scientists)

- Outreach to high schools and community at large

The paper should be a maximum of 1500 words.

My proposals

“Learning before class” or pre-class assignments

- This article would be a how-to guide on using reading and other types of assignments that get the students to start working with the material before they show up in class (based on some blog posts I previously wrote).

Use of authentic audience in student communication

- Often, when we ask students to do some sort of written or oral communication, we ask them to target a specific imagined audience, but the real audience is usually the grader. In this article I would discuss some ideas (some I have tried, some I have not) for giving students' oral and written tasks authentic audiences: audiences that are the actual target of the communication and will genuinely consume it. This follows on from some work I did this summer co-facilitating a writing-across-the-curriculum workshop based on John Bean’s Engaging Ideas.

Making oral exams less intimidating

- This would be based on a blog post I wrote and a conference presentation I gave last year on kinder, gentler oral exams.

Update your bag of teaching practices

- This would be a summary of (mostly research-informed) instructional techniques that the average university instructor might not be aware of. I would discuss how they could be implemented in small and large courses and include appropriate references for people who want to learn more. Techniques I had in mind include pre-class assignments, group quizzes and exams, quiz reflection assignments, using whiteboards in class, and clicker questions beyond one-shot ConcepTests (for example, embedding clicker questions in worked examples).

Your help

This is where you come in: I would appreciate a bit of feedback on which article(s) would likely be of most interest to an audience of physics instructors ranging from very traditional to full-on PER folks.

I have a new job at UBC

Posted: August 12, 2013 Filed under: Uncategorized

Dear friends. I am very excited to let you know that at the end of this week I will have officially started my new job as a tenure-track instructor in the department of physics and astronomy at the University of British Columbia.

This is the department from which I received my PhD, so it is sort of like going home. The department has a great nucleus of Physics Education Research researchers, dabblers and enthusiasts. Thanks mostly to the Carl Wieman Science Education Initiative, there is also a large discipline-based science education research community there. I have a lot of wonderful colleagues at UBC, and I feel very fortunate to be starting a new job at a place that should feel comfortable from the moment I arrive.

A major portion of my job this coming year is going to be curriculum development for a new first-year international student college (called Vantage). I will be working with folks like myself from physics, chemistry and math, as well as academic English language instructors, to put together a curriculum designed to prepare these students for second-year science courses. I will be teaching sections of the physics courses for Vantage College and bringing my education research skills to bear on assessing the program's effectiveness as it evolves over the first few years. Plus I will be teaching all sorts of exciting physics courses in the department of physics and astronomy.

The hardest part about leaving UFV is leaving my very supportive colleagues and leaving all my students that have not yet graduated. Fortunately it will be easy for me to head back for the next couple of years to see them walk across the stage for convocation (and not have to sit on stage cursing that coffee that I drank).

Stay tuned for some new adventures from the same old guy.
